For the benefit of my classmates in the UC Baseline program (see this earlier post to find out what it’s about), I’m posting a regular series of notes here on Global Nerdy to supplement the class material. As our instructor Tremere said, what’s covered in the class merely scratches the surface, and we should use it as a launching point for our own independent study.

There was a lot of introductory material to cover on day one of the Hardware 101 portion of the program, and there’s one bit of basic but important material that I think deserves a closer look, especially for my fellow classmates who’ve never had to deal with it before: How binary and hexadecimal numbers are related.

The problem with binary (for humans, anyway)

Consider the population of Florida. According to the U.S. Census Bureau, on July 1, 2019, that number was estimated to be 21,477,737 in base 10, a.k.a. the decimal system.

Here’s the same number, expressed in base 2, a.k.a. the binary system: 1010001111011100101101001.

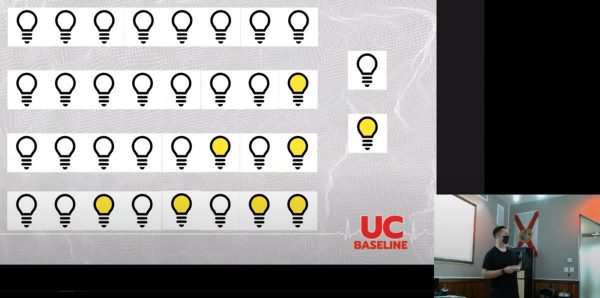
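If you’d like to check that conversion yourself, here’s a minimal Python sketch of the usual repeated-division-by-2 method. The function name `to_binary` is my own, made up for illustration; Python’s built-in `bin()` does the same job.

```python
def to_binary(n):
    """Convert a non-negative integer to a string of binary digits."""
    if n == 0:
        return "0"
    digits = []
    while n > 0:
        digits.append(str(n % 2))  # the remainder is the next bit, right to left
        n //= 2                    # integer-divide by 2 and repeat
    return "".join(reversed(digits))

florida = 21_477_737
print(to_binary(florida))       # 1010001111011100101101001
print(len(to_binary(florida)))  # 25 binary digits, versus 8 decimal digits
```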

That’s the problem with binary numbers: Because they use only two digits, 0 and 1, they grow in length extremely quickly, which makes them hard for humans to read. Can you tell the difference between 100000000000000000000000 and 1000000000000000000000000? Be careful, because those two numbers are significantly different — one is twice the size of the other!

(Think about it: In the decimal system, you make a number ten times as large by tacking a 0 onto the end. For the exact same reason, tacking a 0 onto the end of a binary number doubles that number.)
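Here’s a quick way to see the doubling in Python. The zero counts (23 and 24) are just values I picked for illustration.

```python
a = int("1" + "0" * 23, 2)  # a 1 followed by 23 zeros, read as binary
b = int("1" + "0" * 24, 2)  # the same number with one more 0 tacked on

print(a)            # 8388608
print(b)            # 16777216
print(b == 2 * a)   # True
print(b == a << 1)  # True: shifting left by one bit also tacks on a 0
```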

Hexadecimal is an easier way to write binary numbers

Once again, the problem is that:

- Binary numbers, because they use only two digits — 0 and 1 — get really long really quickly, and

- Decimal numbers don’t convert easily to binary.

What we need is a numerical system that:

- Can represent really big numbers with relatively few characters, and

- Converts easily to binary.

Luckily for us, there’s a numerical system that fits this description: Hexadecimal. The root words for hexadecimal are hexa (Greek for “six”) and decimal (from Latin for “ten”), and it means base 16.

Using 4 binary digits, you can represent the numbers 0 through 15:

| Decimal | Binary |
|---|---|
| 0 | 0000 |
| 1 | 0001 |
| 2 | 0010 |
| 3 | 0011 |
| 4 | 0100 |
| 5 | 0101 |
| 6 | 0110 |
| 7 | 0111 |
| 8 | 1000 |
| 9 | 1001 |
| 10 | 1010 |
| 11 | 1011 |
| 12 | 1100 |
| 13 | 1101 |
| 14 | 1110 |
| 15 | 1111 |

Hexadecimal is the answer to the question “What if we had a set of digits that represented the 16 numbers of 0 through 15?”

Let’s repeat the above table, this time with hexadecimal digits:

| Decimal | Binary | Hexadecimal |
|---|---|---|
| 0 | 0000 | 0 |
| 1 | 0001 | 1 |
| 2 | 0010 | 2 |
| 3 | 0011 | 3 |
| 4 | 0100 | 4 |
| 5 | 0101 | 5 |
| 6 | 0110 | 6 |
| 7 | 0111 | 7 |
| 8 | 1000 | 8 |
| 9 | 1001 | 9 |
| 10 | 1010 | A |
| 11 | 1011 | B |
| 12 | 1100 | C |
| 13 | 1101 | D |
| 14 | 1110 | E |
| 15 | 1111 | F |

Hexadecimal gives us easier-to-read numbers where each digit represents a group of 4 binary digits. Because of this, it’s easy to convert back and forth between binary and hexadecimal.

Since we’re creatures of base 10, we have the single characters to represent the digits 0 through 9, but no single character to represent 10, 11, 12, 13, 14, and 15, which are digits in hexadecimal. To work around this problem, hexadecimal uses the first 6 letters from the Roman alphabet: A, B, C, D, E, and F.
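If you don’t feel like memorizing the table, you can have Python rebuild it. This is just a sketch using the built-in `format()` function; the variable names are mine.

```python
rows = []
for n in range(16):
    binary = format(n, "04b")  # 4 binary digits, zero-padded on the left
    hexa = format(n, "X")      # one uppercase hexadecimal digit
    rows.append((n, binary, hexa))

for n, binary, hexa in rows:
    print(f"{n:>2}  {binary}  {hexa}")
```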

Let’s try representing a decimal number in binary, and then hexadecimal. Consider the number 49,833. It’s the value of the two bytes that encode ©, the copyright symbol, in UTF-8 (the symbol’s Unicode code point on its own is the smaller number 169). Here’s its representation in binary:

1100001010101001

That’s a hard number to read, and if you had to manually enter it, the odds are pretty good that you’d make a mistake. Let’s convert it to its hexadecimal equivalent.

We do this by first breaking that binary number into groups of 4 bits (remember, a single hexadecimal digit represents 4 bits, and “bit” is a portmanteau for “binary digit”):

1100 0010 1010 1001

Now let’s use the table above to look up the hexadecimal digit for each of those groups of 4:

1100 0010 1010 1001

C 2 A 9

There you have it:

- The decimal representation of the number is 49,833,

- its binary representation is 1100001010101001,

- in hexadecimal, it’s C2A9,

- and when you interpret this number’s two bytes as UTF-8 text, you get this character: ©
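The group-and-look-up steps above can be sketched in Python. The function name `binary_to_hex` is hypothetical, something I made up for this example; in real code you’d more likely write `format(int(bits, 2), "X")`. The last line decodes the two bytes C2 and A9 as UTF-8 text.

```python
def binary_to_hex(bits):
    """Convert a string of binary digits to hexadecimal, 4 bits at a time."""
    # Pad with zeros on the left so the length is a multiple of 4.
    padded_length = -(-len(bits) // 4) * 4
    bits = bits.zfill(padded_length)
    # Split into groups of 4 bits, then look up each group's hex digit.
    groups = [bits[i:i + 4] for i in range(0, len(bits), 4)]
    return "".join("0123456789ABCDEF"[int(group, 2)] for group in groups)

print(binary_to_hex("1100001010101001"))      # C2A9
print(bytes.fromhex("C2A9").decode("utf-8"))  # ©
```

The padding step matters for numbers like Florida’s population, whose 25 binary digits don’t divide evenly into groups of 4.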

How to indicate if you’re writing a number in decimal, binary, or hexadecimal form

Because we’re base 10 creatures, we simply write decimal numbers as-is:

49,833

To indicate that a number is in binary, we prefix it with the number zero followed by a lowercase b:

0b1100001010101001

This is a convention used in many programming languages. Try it for yourself in JavaScript:

    // This will print "49833" in the console
    console.log(0b1100001010101001)

Or if you prefer, Python:

    # This will print "49833" in the console
    print(0b1100001010101001)

To indicate that a number is in hexadecimal, we prefix it with the number zero followed by a lowercase x:

0xC2A9

Once again, try it for yourself in JavaScript:

    // Both of these will print "49833" in the console
    console.log(0xc2a9)
    console.log(0xC2A9)

Or Python:

    # Both of these will print "49833" in the console
    print(0xc2a9)
    print(0xC2A9)
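The prefixes work in the other direction, too. Here’s a short Python sketch that turns a number into prefixed binary and hexadecimal strings, then reads those strings back in. It uses only the built-ins `bin()`, `hex()`, and `int()`.

```python
n = 49833

print(bin(n))  # 0b1100001010101001
print(hex(n))  # 0xc2a9

# With a base of 0, int() reads the prefix to work out the base.
print(int("0b1100001010101001", 0))  # 49833
print(int("0xC2A9", 0))              # 49833

# Without a prefix, pass the base explicitly.
print(int("C2A9", 16))               # 49833
```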

Common groupings of bits and hexadecimal digits

4 bits: A half-byte, tetrade, or nybble

A single hexadecimal digit represents 4 bits, and my favorite term for a group of 4 bits is nybble. The 4 bits that make up a nybble can represent the numbers 0 through 15.

“Nybble” is one of those computer science-y jokes that’s based on the fact that a group of 8 bits is called a byte. I’ve seen the terms half-byte and tetrade also used.
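To make the “half-byte” idea concrete, here’s a small Python sketch that splits one byte into its two nybbles using a shift and a mask. The value 0xA9 is just an example I chose.

```python
byte = 0xA9  # 10101001 in binary

high_nybble = byte >> 4   # shift the top 4 bits down
low_nybble = byte & 0x0F  # mask off everything but the bottom 4 bits

print(format(high_nybble, "04b"), format(high_nybble, "X"))  # 1010 A
print(format(low_nybble, "04b"), format(low_nybble, "X"))    # 1001 9
```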

8 bits: A byte

Two hexadecimal digits represent 8 bits, and a group of 8 bits is called a byte. The 8 bits that make up a byte can represent the numbers 0 through 255, or the numbers -128 through 127.
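The two ranges come from reading the same 8 bits in two different ways. The sketch below assumes two’s complement, the usual scheme for signed integers, which is what Python’s `int.from_bytes()` uses when you pass `signed=True`.

```python
all_ones = bytes([0xFF])  # 11111111
top_bit = bytes([0x80])   # 10000000

print(int.from_bytes(all_ones, "big", signed=False))  # 255
print(int.from_bytes(all_ones, "big", signed=True))   # -1
print(int.from_bytes(top_bit, "big", signed=False))   # 128
print(int.from_bytes(top_bit, "big", signed=True))    # -128
```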

In the era of the first general-purpose microprocessors, the data bus was 8 bits wide, and so the byte was the standard unit of data. Every character in the ASCII character set can be expressed in a single byte. Each of the 4 numbers in an IPv4 address is a byte.

16 bits: A word

Four hexadecimal digits represent 16 bits, and a group of 16 bits is most often called a word. The 16 bits that make up a word can represent the numbers 0 through 65,535, or the numbers -32,768 through 32,767. Either way, that’s 65,536 distinct values, a count often referred to as “64K”.

If you were computing in the late ’80s or early ’90s — the era covered by Windows 1 through 3 or Macs in the classic chassis — you were using a 16-bit machine. That meant that it stored data a word at a time.

32 bits: A double word or DWORD

Eight hexadecimal digits represent 32 bits, and a group of 32 bits is often called a double word or DWORD; I’ve also heard the unimaginative term “32-bit word”. The 32 bits that make up a double word can represent the numbers 0 through 4,294,967,295, or the numbers -2,147,483,648 through 2,147,483,647. Either way, that’s 4,294,967,296 distinct values, a count often referred to as “4 gigs”.

32-bit operating systems and computers became mainstream on the desktop in the mid-1990s. Some are still in use today, although they’d now be considered older or “legacy” systems.

The IPv4 address system uses 32 bits, which means that it can represent a maximum of 4,294,967,296 internet addresses. That’s fewer addresses than there are people on earth, and as you might expect, we’re running out of these addresses. There are all manner of workarounds, but the real solution is for everyone to switch to IPv6, which uses 128 bits. That allows for over 3 × 10^38 addresses, enough to assign 100 addresses to every atom on the surface of the earth.
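You can see the 4 bytes inside an IPv4 address with Python’s standard `ipaddress` module. The address 192.168.0.1 below is an arbitrary example, not anything from the class material.

```python
import ipaddress

address = ipaddress.IPv4Address("192.168.0.1")  # an arbitrary example address

print(list(address.packed))  # [192, 168, 0, 1]  (the 4 bytes)
print(hex(int(address)))     # 0xc0a80001        (the same 32 bits as one number)

print(2 ** 32)   # 4294967296, the number of possible IPv4 addresses
print(2 ** 128)  # 340282366920938463463374607431768211456 for IPv6
```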

64 bits: A quadruple word or QWORD

Sixteen hexadecimal digits represent 64 bits, and a group of 64 bits is often called a quadruple word, quad word, or QWORD; I’ve also heard the unimaginative term “64-bit word”. The 64 bits that make up a quadruple word can represent the numbers 0 through 18,446,744,073,709,551,615 (about 18.4 quintillion), or the numbers -9,223,372,036,854,775,808 to 9,223,372,036,854,775,807 (minus 9.2 quintillion through 9.2 quintillion).

If you have a Mac and it dates from 2007 or later, it’s probably a 64-bit machine. macOS has supported 32- and 64-bit applications, but from macOS Catalina (which came out in 2019) onward, it’s 64-bit only. As for Windows-based machines, if your processor is an Intel Core 2/i3/i5/i7/i9 or AMD Athlon 64/Opteron/Sempron/Turion 64/Phenom/Athlon II/Phenom II/FX/Ryzen/Epyc, you have a 64-bit processor.
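To pull the groupings in this section together, here’s a rough Python sketch that computes the unsigned and signed ranges for each size. The helper name `ranges` is my own invention, and the signed range assumes two’s complement.

```python
def ranges(bits):
    """Return the (min, max) pairs for unsigned and signed values of a given width."""
    unsigned = (0, 2 ** bits - 1)
    signed = (-(2 ** (bits - 1)), 2 ** (bits - 1) - 1)
    return unsigned, signed

sizes = [("nybble", 4), ("byte", 8), ("word", 16), ("DWORD", 32), ("QWORD", 64)]
for name, bits in sizes:
    unsigned, signed = ranges(bits)
    hex_digits = bits // 4  # each hexadecimal digit covers 4 bits
    print(f"{name:>6} ({hex_digits:>2} hex digits): "
          f"0 to {unsigned[1]:,} or {signed[0]:,} to {signed[1]:,}")
```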

Need more explanation?

The Khan Academy has a pretty good explainer of the decimal, binary, and hexadecimal number systems: