From The Intercept’s article, “The Internet’s New Favorite AI Proposes Torturing Iranians and Surveilling Mosques”:

To AI’s boosters — particularly those who stand to make a lot of money from it — concerns about bias and real-world harm are bad for business. Some dismiss critics as little more than clueless skeptics or luddites, while others, like famed venture capitalist Marc Andreessen, have taken a more radical turn following ChatGPT’s launch. Along with a batch of his associates, Andreessen, a longtime investor in AI companies and general proponent of mechanizing society, has spent the past several days in a state of general self-delight, sharing entertaining ChatGPT results on his Twitter timeline.

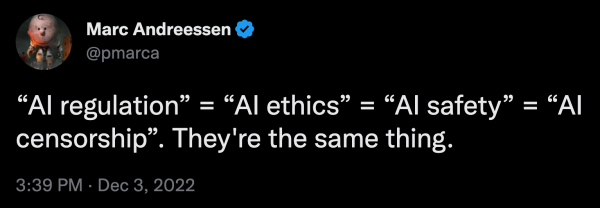

The criticisms of ChatGPT pushed Andreessen beyond his longtime position that Silicon Valley ought only to be celebrated, not scrutinized. The simple presence of ethical thinking about AI, he said, ought to be regarded as a form of censorship. “‘AI regulation’ = ‘AI ethics’ = ‘AI safety’ = ‘AI censorship,’” he wrote in a December 3 tweet. “AI is a tool for use by people,” he added two minutes later. “Censoring AI = censoring people.” It’s a radically pro-business stance even by the free market tastes of venture capital, one that suggests food inspectors keeping tainted meat out of your fridge amounts to censorship as well.

As much as Andreessen, OpenAI, and ChatGPT itself may all want us to believe it, even the smartest chatbot is closer to a highly sophisticated Magic 8 Ball than it is to a real person. And it’s people, not bots, who stand to suffer when “safety” is synonymous with censorship, and concern for a real-life Ali Mohammad [a hypothetical higher-risk person that ChatGPT created as an example] is seen as a roadblock before innovation.