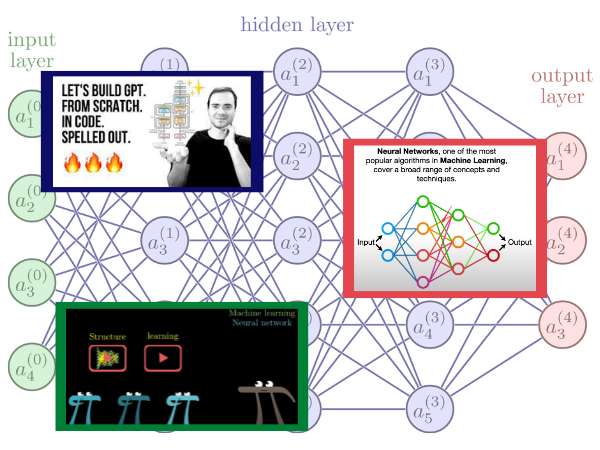

If the popularity of ChatGPT and other recent AI advances has got you interested in neural networks, how they work, and how you might implement one, these three video series should help you get started.

Note that while you might get some basic insights into neural networks by simply watching the videos, the real benefit comes from doing the exercises shown in the videos and experimenting with the math and programming they feature.

Neural networks from a general point of view

If you’re new to neural networks, you’ll want to start with the Neural Networks video series from Grant Sanderson, whose YouTube channel, 3Blue1Brown, is a great place to learn math and algorithms.

- Number of videos in the playlist: 4

- Total length: 1 hour, 4 minutes

- Published: October – November 2017

- Math difficulty: 1 out of 5 in the first video, up to about 3 out of 5 in the last two. You can’t do neural networks without linear algebra and differential calculus, but this series works hard at making them as easy to understand as possible.

- Programming difficulty: 0 out of 5. This is all about the general principles behind neural networks and doesn’t cover any programming at all.
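
To give a rough sense of where the linear algebra and the calculus come in, here is a minimal sketch in plain Python. It is not taken from the videos; the function names (`sigmoid`, `neuron`) and the sample numbers are made up for illustration. A neuron’s output is a weighted sum of its inputs (a dot product) passed through a squashing function, and training depends on how that output changes when a weight is nudged (a derivative).

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias):
    """Weighted sum of the inputs (a dot product) plus a bias, then sigmoid."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

# Illustrative values only; they do not come from the series
inputs = [0.5, -1.0, 2.0]
weights = [0.1, 0.4, -0.2]
bias = 0.3

output = neuron(inputs, weights, bias)

# Estimate how the output changes when the first weight is nudged slightly
h = 1e-6
nudged = [weights[0] + h] + weights[1:]
slope = (neuron(inputs, nudged, bias) - output) / h

print(output, slope)
```

The finite-difference `slope` is only an approximation of the derivative. Working out that derivative exactly, for every weight at once, is the part that calls for the calculus.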

Neural networks from a math point of view

Ever wish you had a math teacher who could make complex topics easier to understand with fun explanations and ukulele music breaks? You do now: Josh Starmer, host of the StatQuest YouTube channel, is that math teacher, and his Neural Networks / Deep Learning video series looks deep into the math behind neural networks.

- Number of videos in the playlist: 20

- Total length: 5 hours, 28 minutes

- Published: August 2020 – January 2023

- Math difficulty: 0 out of 5 in the intro video, working its way up to 3 out of 5 as the series progresses. Once again, linear algebra and differential calculus are involved, but like 3Blue1Brown’s Grant Sanderson, Josh Starmer does a lot to make math concepts easier to understand.

- Programming difficulty: 0 out of 5, until the last three videos, which cover using Python and the PyTorch library. If you’re comfortable with OOP, you’ll do fine.

Neural networks from a Python programmer’s point of view

Are you a Python programmer? Would you like to learn neural networks from a co-founder of OpenAI and the former director of artificial intelligence for Tesla? Then Andrej Karpathy’s Neural Networks: Zero to Hero video series is for you. He says that all you need to understand his videos is a “basic knowledge of Python and a vague recollection of calculus from high school,” but you should keep in mind that this is someone who eats, sleeps, and breathes neural networks and Python. I’m currently working my way through these videos, and if I can follow them, chances are that you can too.

- Number of videos in the playlist: 7

- Total length: 12 hours, 23 minutes

- Published: August 2022 – January 2023

- Math difficulty: 3 out of 5, as there’s what Karpathy calls “high school calculus.” If you took first-year calculus in university and remember the basics of differential calculus — including the bit where dy/dx doesn’t mean you can simply cancel out the d’s — you’ll be fine.

- Programming difficulty: 3.5 out of 5. You’ve got to be comfortable with OOP, lambda functions, and recursion for most of the videos, and for the final video, Let’s Build GPT, you should be familiar with PyTorch. You should also have Jupyter Notebook or one of its variants set up.
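
As a rough gauge of that programming level, here is a minimal sketch of a number that tracks its own derivative. It is not code from the videos; the `Value` class and all of its names are hypothetical, chosen only to show OOP (operator overloading), a lambda, recursion, and the chain rule from differential calculus working together. If this reads comfortably, the prerequisites above are probably covered.

```python
class Value:
    """A number that remembers how it was computed, so gradients can flow back."""

    def __init__(self, data, parents=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents
        self._backward = lambda: None  # leaf values have nothing to propagate

    def __add__(self, other):
        out = Value(self.data + other.data, (self, other))

        def _backward():
            # d(a + b)/da = 1 and d(a + b)/db = 1
            self.grad += out.grad
            other.grad += out.grad

        out._backward = _backward
        return out

    def __mul__(self, other):
        out = Value(self.data * other.data, (self, other))

        def _backward():
            # d(a * b)/da = b and d(a * b)/db = a
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out._backward = _backward
        return out

    def backward(self):
        # Recursively order the graph so each node comes after its parents
        order, seen = [], set()

        def visit(node):
            if node not in seen:
                seen.add(node)
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        # Apply the chain rule from the result back toward the inputs
        for node in reversed(order):
            node._backward()


x = Value(3.0)
y = Value(4.0)
z = x * y + x  # z = xy + x
z.backward()
print(z.data, x.grad, y.grad)  # prints 15.0 5.0 3.0
```

The gradients match what the calculus gives by hand: dz/dx = y + 1 = 5 and dz/dy = x = 3.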