I decided to try out ChatGPT’s newly released GPT-4o model (the lowercase “o” stands for “omni”) by uploading images and asking it to describe them to me.

After this quick test, my impression is simply “wow.”

Image 1: Me and Steven Bosco playing together at the Anaconda party at PyCon US 2022

Me: What’s happening in this picture?

GPT-4o: The picture shows two musicians performing on stage. One musician is playing an accordion, and the other is playing an acoustic guitar and singing into a microphone. The guitarist is wearing a cowboy hat. In the background, there is a banner with the name “Steven Bosco” and a QR code for tips via Venmo and CashApp. The setting appears to be an indoor venue with a rustic, cozy ambiance. There is a small audience in the foreground, and the atmosphere looks lively and engaging.

Not bad. I was impressed that ChatGPT worked out that the banner read “Steven Bosco” even though my head clearly blocks the “B”; it would have had to infer the “Bosco” from the tip sign. I was also impressed by its description of the “feel” of the room.

Image 2: A comic I drew as a storyboard for an app about authentication and authorization

Me: What is being depicted in this image?

GPT-4o: The image is a two-panel cartoon.

Panel 1:

- The setting is a ship’s engine room filled with various controls and equipment.

- There is a large machine, a computer, and a control panel with levers.

- The text reads: “A ship’s engine room is full of important controls! If you don’t manage access to these controls, things can go very wrong, very quickly!”

Panel 2:

- The scene shows a cat wearing a chef’s hat and holding a spoon, standing outside a door labeled “ENGINE ROOM.”

- Next to the door is a sign that reads: “CHEF ACCESS DENIED.”

- The cat is saying, “But I wanted to play with the big red button!”

- The text reads: “For safety’s sake, the ship’s fine-grained authorization allows only engineers and the command crew inside the engine room.”

The cartoon humorously illustrates the importance of restricting access to critical areas on a ship, such as the engine room, to prevent unauthorized personnel from tampering with important controls.

In case you were wondering, I wrote about the comic in this article.

I wanted to see how well GPT-4o does with handwriting. I’m told that my penmanship is unusually readable; results with other people’s handwriting are bound to vary.

I was impressed that it identified the image not just as a comic, but as one with two panels. Even more impressive: ChatGPT got the gist of the comic, right down to its use of humor to illustrate its point.

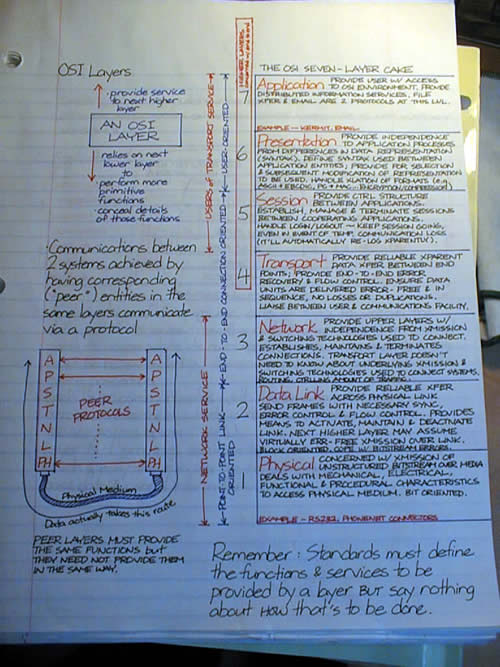

Image 3: My notes from my computer networking class at Crazy Go Nuts University

Me: What’s this image about?

GPT-4o: The image is a detailed handwritten study guide about the OSI (Open Systems Interconnection) model, often referred to as the OSI seven-layer model or OSI seven-layer cake. The OSI model is used to standardize networking functions to support interoperability among different products and software.

The guide includes the following:

Left Side:

- An explanation of OSI layers and their function.

- “Provide service to the layer higher.”

- “Relies on next lower layer to perform.”

- A diagram showing the communication between two systems, with peer protocols between corresponding layers.

- A reminder: “Peer layers must provide the same functions, but they need not provide them in the same way.”

Right Side:

- A detailed description of each of the seven OSI layers:

  - Physical Layer: Deals with the physical connection between devices, transmission of raw bit streams over a physical medium.

  - Data Link Layer: Ensures error-free transmission between two directly connected nodes, managing frame synchronization, error control, and flow control.

  - Network Layer: Manages data routing, forwarding, addressing, and packet switching.

  - Transport Layer: Provides reliable data transfer services to the upper layers, including flow control, error correction, and segmentation.

  - Session Layer: Manages sessions between applications, providing services like establishment, maintenance, and termination of connections.

  - Presentation Layer: Translates data between the application layer and the network, handling data encryption, decryption, and compression.

  - Application Layer: Provides network services directly to end-user applications, including email, file transfer, and network management.

- A final note: “Remember: Standards must define the functions & services to be provided by a layer but say nothing about how that’s to be done.”

This guide is likely intended for students or professionals studying networking concepts, providing a clear and concise overview of the OSI model and its layers.

The challenge with this one was the low resolution of the photo. I took it with my late ’90s-era camera with its potato-fidelity 1024 × 768 resolution (and that was its “high-quality” mode; normal mode was 640 × 480!).

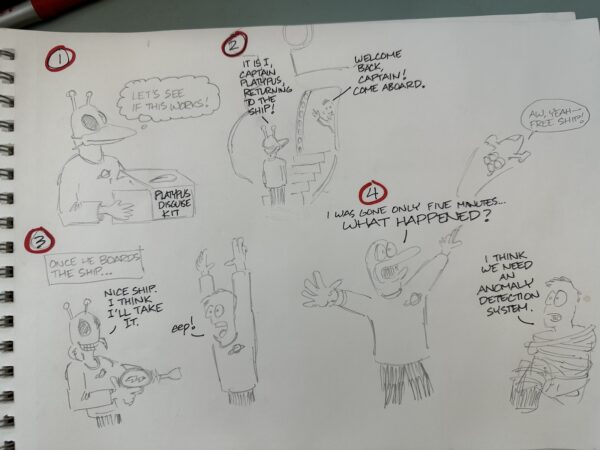

Image 4: Another comic I drew as a storyboard for that app about authentication and authorization

Me: Describe this image, please.

GPT-4o: This image is a four-panel cartoon strip that humorously depicts a situation involving an alien, a disguise kit, and a spaceship. Here’s a description of each panel:

Panel 1:

- A character with antennae and a space uniform is holding a box labeled “PLATYPUS DISGUISE KIT.”

- The character is thinking, “Let’s see if this works!”

Panel 2:

- The same character, now disguised with a platypus head, is returning to a spaceship.

- The character says, “It is I, Captain Platypus, returning to the ship!”

- Another character on the ship welcomes him, saying, “Welcome back, Captain! Come aboard.”

- In the background, someone is seen thinking, “Aw, yeah—free ship!”

Panel 3:

- The disguised character is now onboard the ship.

- The text reads: “Once he boards the ship…”

- The disguised character says, “Nice ship. I think I’ll take it.”

- Another character on the ship is startled and says, “eep!”

Panel 4:

- The real captain, presumably Captain Platypus, returns and is shocked by the situation.

- He exclaims, “I was gone only five minutes… WHAT HAPPENED?”

- Another character, who appears to be tied up, responds, “I think we need an anomaly detection system.”

The cartoon humorously highlights the importance of security and the potential consequences of not having proper identification and detection systems in place on a spaceship.

This is also a comic from this article.

One of the challenges with this image is that it’s a four-panel comic, but there are no lines separating the panels — just white space. Following only the circled numbers specifying the order in which the comic should be read, GPT-4o “got” the comic, including the fact that the character in panel 1 was impersonating Captain Platypus.

GPT-4o got one thing wrong: it thought that the “free ship!” line was from panel 2, when it actually belongs to the alien stealing the ship in panel 4.