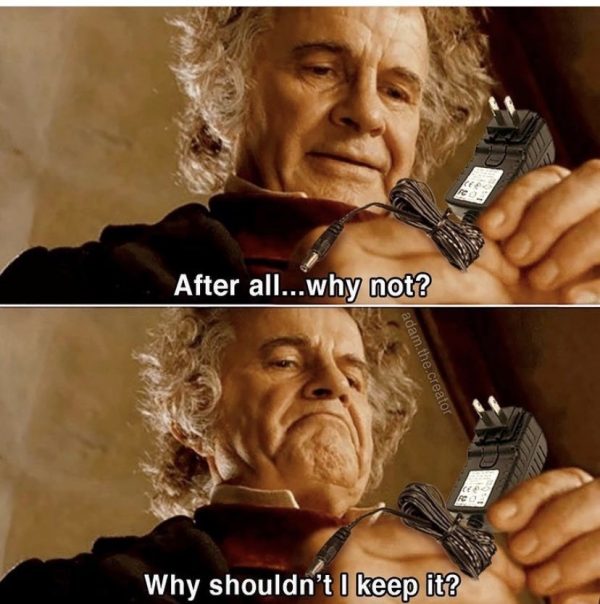

If you’re like most people, you probably have a collection of old power adapters and chargers that you’ve held onto, even though the devices they used to power are long gone. You probably thought that someday, one of them might come in handy.

This article will help you figure out if an adapter is compatible with a given device.

A little terminology

Before we begin, let’s make sure we’re using the same words to refer to the different “plugs” on an adapter or charger:

- By plug, I mean the part of the adapter or charger that you plug into the wall.

- By connector, I mean the part of the adapter or charger that you plug into the device.

With that out of the way, let’s begin!

How to tell if a power adapter or charger is right for your device

Step 1: Is the adapter’s polarity correct for your device?

Although you could do steps 1 and 2 in either order, I prefer to get the “device killer” question out of the way first. That question is: Does the connector’s polarity match the device’s polarity? Simply put, you want to find out which part of the connector is positive and which part is negative.

In direct current (DC), which is the kind of current an adapter provides, polarity determines the direction in which current flows through the device. You do not want current to flow through your device in the reverse direction.

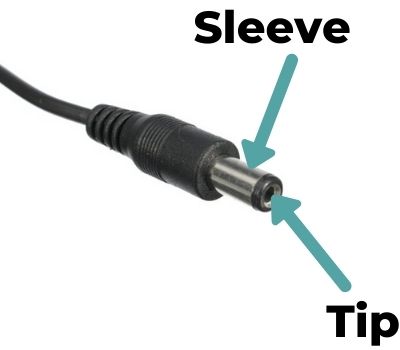

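A connector has two metal parts: the sleeve, which is the outer metal part, and the tip, which is the inner metal part.

Both your adapter and your device should have a label or tag that indicates their polarity. It will be one of two kinds. The first is negative sleeve/positive tip (often called “center positive”), indicated by a symbol in which the center dot is connected to a plus sign and the surrounding C-shaped ring is connected to a minus sign.

The second is positive sleeve/negative tip (“center negative”), indicated by the same symbol with the plus and minus signs swapped.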

Are the polarity markings on the adapter and the device the same?

- Yes: If the polarity markings on both are the same, you can proceed to the next step.

- No: If the polarity markings are different, DO NOT proceed to the next step, and definitely DO NOT plug the connector into the device.

- If there are no polarity markings on the adapter: See the SPECIAL BONUS SECTION at the end of this article.
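The Step 1 decision can be written as a small function. This is a hypothetical sketch: the `Polarity` enum and the `polarity_step` function are illustrative names, not part of any library, and an adapter with no polarity marking is passed as `None`.

```python
from enum import Enum
from typing import Optional


class Polarity(Enum):
    """The two connector polarity conventions (illustrative names)."""
    TIP_POSITIVE = "negative sleeve / positive tip"
    TIP_NEGATIVE = "positive sleeve / negative tip"


def polarity_step(adapter: Optional[Polarity], device: Polarity) -> str:
    """Step 1: compare the adapter's polarity marking with the device's.

    `adapter` is None when the adapter has no polarity marking at all.
    """
    if adapter is None:
        # No marking: the polarity has to be measured or guessed first.
        return "unknown: determine the adapter's polarity first"
    if adapter == device:
        return "match: proceed to step 2"
    # Reversed polarity can destroy the device, so stop here.
    return "mismatch: do not plug the connector into the device"
```

For example, `polarity_step(Polarity.TIP_POSITIVE, Polarity.TIP_NEGATIVE)` returns the mismatch message, meaning you should stop at this step.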

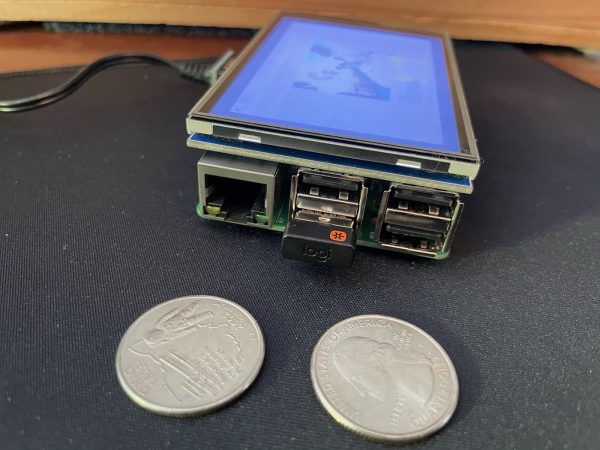

Step 2: Does the adapter’s connector fit into your device?

With the adapter’s plug NOT plugged into an outlet, can you plug the connector into the device?

- Yes: If the connector fits, you can proceed to the next step.

- No: If the connector doesn’t even fit, you can be pretty certain that this adapter isn’t going to work for the device.

Step 3: Do the voltage and current coming from the adapter match the voltage and current required by the device?

If you’ve reached this step, you’ve now taken care of the simple matches: The adapter will push current into your device in the right direction, and the connector fits.

Now it’s time to look at the numbers, namely voltage and current.

- Look at the voltage (measured in volts, or V for short) and current (measured in amperes, also called amps, or A for short) marked on the adapter.

- Look at the same values marked on the device.

Do the voltage and current values on the adapter and device match?

- Yes: If the numbers match, you’re good! You can use the adapter to power the device.

- No, neither number matches: Don’t use the adapter to power the device.

- No, one number matches and the other doesn’t: Don’t write off the adapter as incompatible yet. Consult the table below:

| | …and it’s LOWER than what your device needs | …and it’s HIGHER than what your device needs |
|---|---|---|
| If the voltage (V) doesn’t match… | MMMMAYBE. Your device might work, but it might also work unreliably. Simpler devices that convert electricity directly into some kind of result (such as a light or a speaker) are more likely to work than more complex ones (such as a hard drive, or anything with a processor). | NO! WILL PROBABLY RUIN YOUR DEVICE. Your device might work, but the additional voltage may overheat and damage it. |
| If the current (A) doesn’t match… | NO! WILL PROBABLY RUIN YOUR ADAPTER. Your device might work, but it will attempt to draw more current than the adapter is rated for, which may overheat and damage the adapter. | GO FOR IT! Your device will work. The adapter’s current rating is the maximum it can deliver, and your device will draw only the current it needs. |
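The Step 3 rules, including the table above, can be sketched as a single function. This is a hypothetical helper (`voltage_current_step` is an illustrative name); it assumes the polarity and fit checks have already passed and compares the label values exactly as printed.

```python
def voltage_current_step(adapter_v: float, adapter_a: float,
                         device_v: float, device_a: float) -> str:
    """Step 3: apply the voltage/current rules from the table.

    Assumes steps 1 (polarity) and 2 (connector fit) already passed.
    Label values are compared exactly.
    """
    v_match = adapter_v == device_v
    a_match = adapter_a == device_a

    if v_match and a_match:
        return "yes: use the adapter"
    if not v_match and not a_match:
        return "no: don't use the adapter"

    if not v_match:
        # Only the voltage differs.
        if adapter_v < device_v:
            return "maybe: the device might work unreliably"
        return "no: will probably ruin your device"

    # Only the current differs.
    if adapter_a < device_a:
        return "no: will probably ruin your adapter"
    return "go for it: the device draws only the current it needs"
```

For example, a 12 V, 2 A adapter and a device labeled 12 V, 1.5 A give `voltage_current_step(12, 2, 12, 1.5)`, which returns the “go for it” result. A 15 V, 1.5 A adapter for the same device returns “no: will probably ruin your device”.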

SPECIAL BONUS SECTION:

What if the adapter doesn’t have polarity markings?

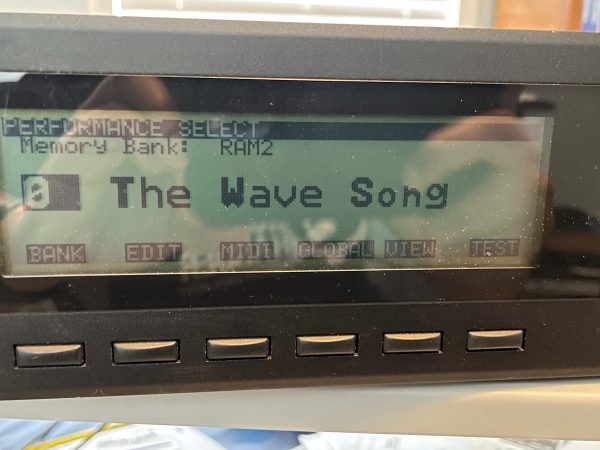

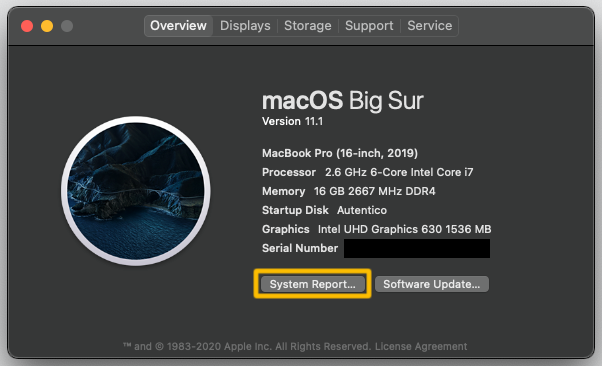

Believe it or not, it happens. In fact, I have one such adapter, pictured here.


As you can see, its label lists a lot of information, but not the polarity. This means you’ll either have to determine the polarity yourself or take a leap of faith.

If you want to determine the adapter’s polarity yourself

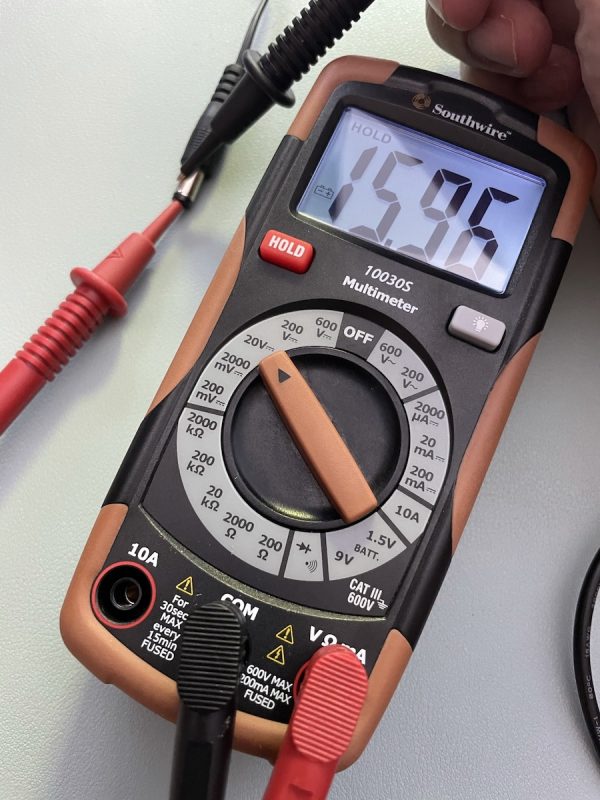

If you want to determine the polarity yourself, you’ll need a voltmeter (a multimeter set to measure voltage works too). Plug the adapter into an outlet, then set the meter to read DC voltage on a range that covers the adapter’s rated output. The adapter above is rated to output 12 volts (V), so I set my meter to read a maximum of 20 V. I put the positive probe inside the connector so that it made contact with the tip, and touched the negative probe to the sleeve. A positive number appeared on the display.

With the positive probe touching the tip and the negative probe touching the sleeve, a positive reading means that the tip is at a higher voltage than the sleeve. Current would therefore flow from the tip, through the device, to the sleeve, which means that the tip is positive and the sleeve is negative.

If the reading were negative, it would mean that the sleeve is at the higher voltage, so current would flow from the sleeve to the tip. In that case, the sleeve is positive and the tip is negative.

As a check, when I put the positive probe on the sleeve and the negative probe on the tip of the same adapter, the meter showed a negative voltage. The reading is negative because I had the probes reversed: the negative probe was now on the higher-voltage contact, so the current appears to flow backwards, from the negative probe to the positive probe. Once again, this indicates that the tip is positive and the sleeve is negative.
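The probe logic from these measurements fits in one function. This is a hypothetical sketch (`polarity_from_reading` is an illustrative name): it takes the number shown on the meter and whether the positive probe was on the tip, and it handles both probe arrangements.

```python
def polarity_from_reading(reading_volts: float, positive_probe_on_tip: bool) -> str:
    """Infer connector polarity from a DC voltmeter reading.

    reading_volts: the value shown on the meter (may be negative).
    positive_probe_on_tip: True if the positive probe touches the tip and
    the negative probe the sleeve; False if the probes are swapped.
    """
    if reading_volts == 0:
        # Hypothetical hint: a zero reading tells us nothing about polarity.
        return "no reading: check that the adapter is plugged in and the probes make contact"
    # A positive reading means the positive probe sits on the higher-voltage contact.
    positive_probe_contact_is_higher = reading_volts > 0
    # Swapping the probes flips the sign, so compare the two booleans.
    tip_is_positive = positive_probe_contact_is_higher == positive_probe_on_tip
    if tip_is_positive:
        return "positive tip, negative sleeve"
    return "negative tip, positive sleeve"
```

With a hypothetical reading of 12.3 V and the positive probe on the tip, `polarity_from_reading(12.3, True)` returns “positive tip, negative sleeve”; a reading of -12.3 V with the probes swapped, `polarity_from_reading(-12.3, False)`, returns the same answer, just like the two measurements above.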

If you want to take a leap of faith

If you don’t have a voltmeter or multimeter handy, you can always take a leap of faith and assume that your adapter has a positive tip and a negative sleeve, which is how most adapters are designed. The tip is well protected and difficult to touch by accident. Since current flows from positive to negative, making the hard-to-reach tip positive and the easy-to-reach sleeve negative helps prevent accidental shorts.