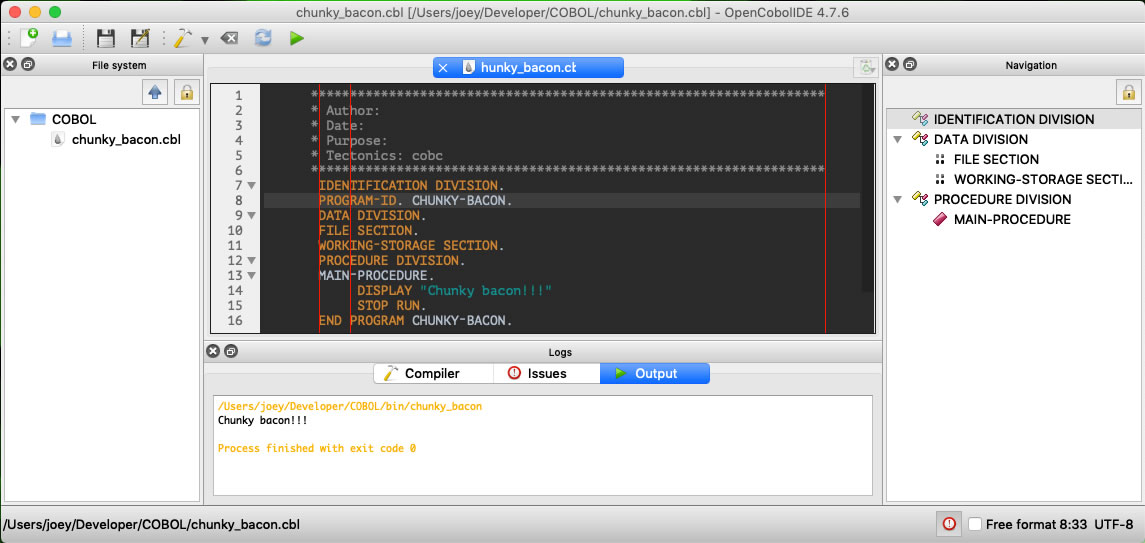

OpenCobolIDE running on my MacBook Pro.

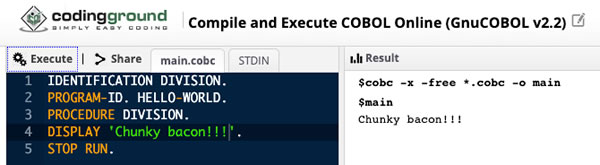

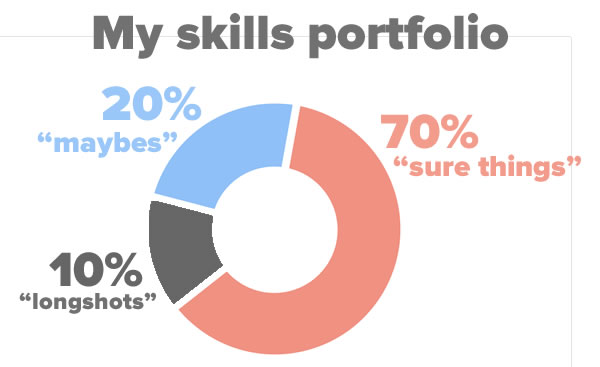

In an earlier post, I played around with an online COBOL compiler. Seeing as I’m a COVID-19 unemployment statistic and there’s a call for COBOL developers to help shore up ancient programs that are supposed to be issuing relief checks, I’ve decided to devote a little more time next week (this week, I have to finish revising a book) to playing with the ancient programming language. I’ll write about my experiences here, and I’ll also post some videos on YouTube.

If you want to try your hand at COBOL on the Mac, you’re in luck: it’s a lot easier than I expected it would be!

Get the compiler: GnuCOBOL

COBOL isn’t used much outside enterprise environments, which means that COBOL compilers and IDEs are sold at enterprise prices. If you’re an individual programmer without the backing of a company with a budget to pay for developer tools, your only real option is GnuCOBOL.

On macOS, the simplest way to install GnuCOBOL is to use Homebrew.

If Homebrew isn’t already installed on your system (and seriously, you should have it if you’re using your Mac as a development machine), open a terminal window and enter this to install it:

```sh
/bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/HEAD/install.sh)"
```

If Homebrew is installed on your system, first make sure that it’s up to date by using this command in a terminal window:

```sh
brew update
```

Then install GnuCOBOL by entering the following:

```sh
brew install gnucobol
```
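If you’d rather run those steps as a single command, here’s a minimal sketch wrapped in a shell function. The name `install_gnucobol` is hypothetical (it isn’t part of Homebrew or GnuCOBOL), and it assumes the Homebrew formula is named `gnucobol` and that Homebrew’s installer lives at the URL above. On a fresh Homebrew install, `brew` may not be on your PATH until you follow the installer’s instructions, in which case the function stops early.

```sh
#!/bin/sh
# install_gnucobol is a hypothetical helper, not part of Homebrew or GnuCOBOL.
install_gnucobol() {
  # Install Homebrew only if the brew command isn't already on the PATH.
  if ! command -v brew >/dev/null 2>&1; then
    /bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/HEAD/install.sh)" || return 1
  fi
  # Refresh Homebrew's package lists, then install the GnuCOBOL formula.
  brew update && brew install gnucobol || return 1
  # Confirm the compiler is reachable by printing cobc's version information.
  cobc --version
}
```

Run it by sourcing the file (or pasting the function into your terminal) and then typing `install_gnucobol`.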

Once that’s done, GnuCOBOL should be on your system under the name cobc. You can confirm that it’s on your system with the following command…

```sh
cobc -v
```

…which should result in a message like this:

```
cobc (GnuCOBOL) 2.2.0
Built Aug 20 2018 15:48:14 Packaged Sep 06 2017 18:48:43 UTC
C version "4.2.1 Compatible Apple LLVM 10.0.0 (clang-1000.10.43.1)"
loading standard configuration file 'default.conf'
cobc: error: no input files
```
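That “no input files” error is expected, since `cobc -v` was given nothing to compile. To check the whole toolchain, here’s a minimal sketch that writes a tiny fixed-format program to `hello.cob` (the file name is just an example) and builds it with cobc’s `-x` option, which produces a standalone executable, plus `-Wall` for warnings. It assumes `cobc` is on your PATH and skips the compile step if it isn’t.

```sh
#!/bin/sh
# Write a minimal fixed-format COBOL program. The quoted heredoc ('EOF') keeps
# the shell from touching the text, and the leading spaces are preserved:
# 7 spaces put the division and PROGRAM-ID lines at column 8 (Area A),
# 11 spaces put the statements at column 12 (Area B).
cat > hello.cob <<'EOF'
       IDENTIFICATION DIVISION.
       PROGRAM-ID. HELLO.
       PROCEDURE DIVISION.
           DISPLAY "Hello, world!".
           STOP RUN.
EOF

# Compile to a standalone executable (-x) with warnings enabled (-Wall),
# then run it. Skipped if cobc isn't on the PATH.
if command -v cobc >/dev/null 2>&1; then
  cobc -x -Wall hello.cob && ./hello
else
  echo "cobc not found; install GnuCOBOL first" >&2
fi
```

If GnuCOBOL is set up correctly, `cobc` creates an executable named `hello` next to the source file, and running it prints `Hello, world!`.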

Get the IDE: OpenCobolIDE


Unless you’ve got some way to configure your text editor to deal with the language’s quirks, you really want to use an IDE when coding in COBOL. Once again, an open source project comes to the rescue: OpenCobolIDE.

OpenCobolIDE relies on Python 3, so make sure you’ve installed Python 3 before installing OpenCobolIDE. I installed it on my computer by installing the Python 3 version of Anaconda Individual Edition.

If Python 3 is already on your system, you have a couple of options for installing OpenCobolIDE:

- Installing OpenCobolIDE using the Python 3 package installer, pip3, which gives you a program that you launch via the command line. This gives you OpenCobolIDE version 4.7.6.

- Downloading the .dmg disk image file, which gives you an app that lives in the Applications folder and which you launch by clicking an icon. This gives you OpenCobolIDE version 4.7.4.

I strongly recommend going with the first option. OpenCobolIDE is no longer maintained, so you might as well go with the latest version, which you can only get by installing it with pip3. Version 4.7.6 has a couple of key additional features that you’ll find handy, including:

- Support for all the COBOL keywords in GnuCOBOL 2.x. This is a big deal in COBOL, which has something in the area of 400 reserved words. For comparison, C and Python have fewer than 40 reserved words each.

- Better indentation support (and you want that in COBOL, thanks to its ridiculous column rules from the 1960s).

- Support for compiler flags like `-W` and `-Wall`. And hey, warning flags are useful!

To install OpenCobolIDE using the Python 3 package installer, pip3, enter the following in a terminal window:

```sh
pip3 install OpenCobolIDE --upgrade
```

To launch OpenCobolIDE, enter this:

```sh
OpenCobolIDE
```
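Since OpenCobolIDE needs Python 3, it can help to check for it before installing. Here’s a minimal sketch of that check as a shell function; the name `install_ocide` is hypothetical (it isn’t part of OpenCobolIDE), and it swaps `pip3` for `python3 -m pip`, which runs the same package installer but guarantees it’s the one belonging to the `python3` on your PATH.

```sh
#!/bin/sh
# install_ocide is a hypothetical helper, not part of OpenCobolIDE.
install_ocide() {
  # OpenCobolIDE needs Python 3, so stop early if python3 isn't available.
  if ! command -v python3 >/dev/null 2>&1; then
    echo "python3 not found: install Python 3 first (for example, via Anaconda)" >&2
    return 1
  fi
  # "python3 -m pip" runs the pip that belongs to this python3, so the package
  # lands in the same Python installation you just checked for.
  python3 -m pip install --upgrade OpenCobolIDE
}
```

Usage: `install_ocide && OpenCobolIDE` installs (or upgrades) the IDE and launches it only if the install succeeded.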

You’ll be greeted with this window:

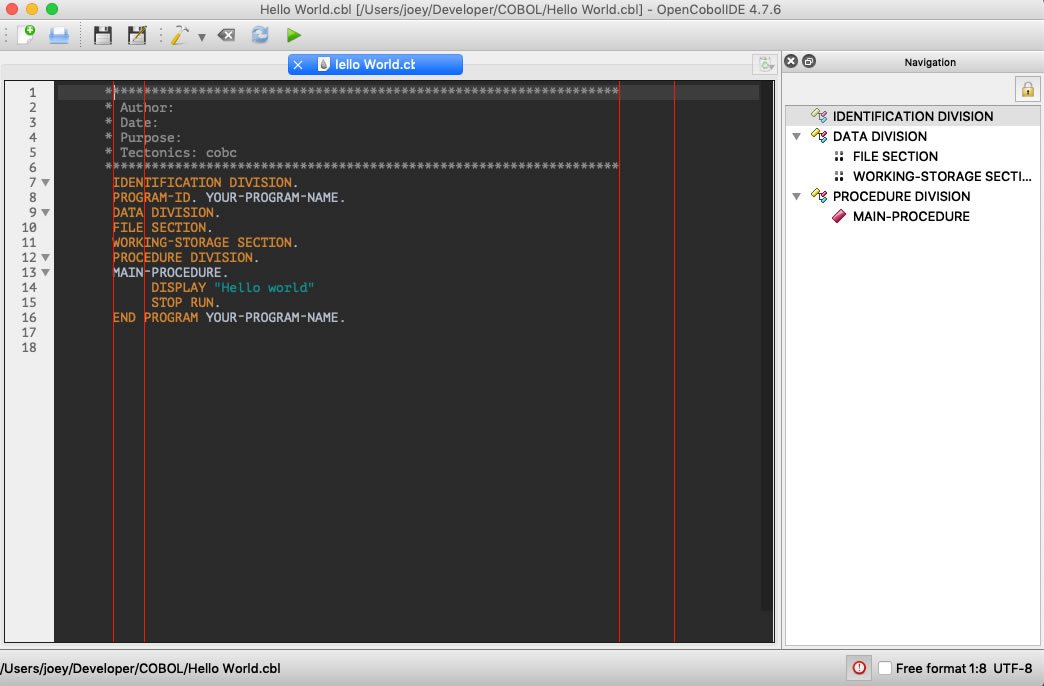

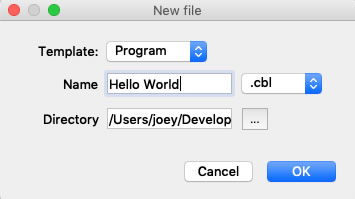

Tap New file. You’ll see this:

For Template, select Program, enter the name and location for your program file, and click OK.

You should see this:

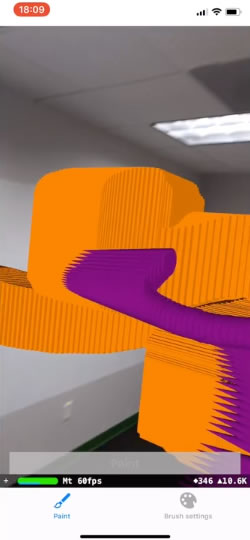


Don’t mistake those red vertical lines for glitches. They’re column guides. COBOL is from the days of punched cards, and is one of those programming languages that’s really fussy about columns:

- The first 6 columns are reserved for sequence numbers.

- Column 7 is the indicator area: a hyphen here marks a line that continues the previous one, an asterisk marks the line as a comment, and a few other special characters have their own meanings.

- Columns 8 through 72 are for code, and are broken down into 2 zones:

  - Area A: Columns 8 through 11, which are used for DIVISION and SECTION headers and paragraph names, as well as the level numbers 01 and 77 (COBOL is weird).

  - Area B: Columns 12 through 72, which are for the rest of the code.

- Columns 73 through 80 make up the “identification” area and are ignored by the compiler. It’s useful for very short comments along the lines of “TODO” or “HACK”.
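To make these column rules concrete, here’s a small sketch: a shell function wrapping an awk script that reports, for each line of a fixed-format source file, which column its code starts in and whether that’s Area A or Area B. The name `cobol_columns` is hypothetical (it isn’t a GnuCOBOL or OpenCobolIDE tool), and it only knows the basic rules listed above: it treats an asterisk in column 7 as a comment and doesn’t interpret the other indicator characters.

```sh
#!/bin/sh
# cobol_columns FILE: hypothetical helper that classifies each line of a
# fixed-format COBOL file by the column where its code begins.
cobol_columns() {
  awk '
    {
      ind = substr($0, 7, 1)                # column 7: the indicator area
      if (ind == "*") { printf "%d: comment\n", NR; next }
      code = substr($0, 8, 65)              # columns 8-72: Areas A and B
      if (code !~ /[^ ]/) next              # nothing but spaces: skip the line
      match(code, /[^ ]/)                   # RSTART = offset of first non-space
      col = RSTART + 7                      # turn that offset into a column number
      printf "%d: starts in column %d (%s)\n", NR, col, (col <= 11 ? "Area A" : "Area B")
      if (length($0) > 72)
        printf "%d: note: text past column 72 is ignored by the compiler\n", NR
    }
  ' "$1"
}
```

Usage: `cobol_columns your-program.cob` (any fixed-format source file). A line whose code starts in column 8 is reported as Area A; one starting in column 12 is reported as Area B.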

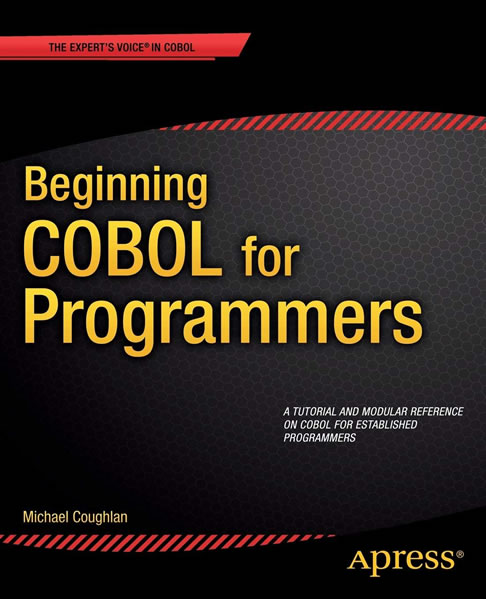

Get the book: Beginning COBOL for Programmers

There aren’t many current books on COBOL out there. Apress’ Beginning COBOL for Programmers is probably the best of the bunch, and, unlike many older COBOL books, it makes sense to developers with a solid grounding in modern programming languages.

The ebook is available for US$49.99, but if you use the coupon code SPRING20A by the end of Thursday, April 16, you can get a $20 discount, reducing the price to $29.99. If you want the book for this price, take action before it’s too late!

Are you looking for someone with both strong development and “soft” skills? Someone who’s comfortable either being in a team of developers or leading one? Someone who can handle code, coders, and customers? Someone who can clearly communicate with both humans and technology? Someone who can pick up COBOL well enough to write useful articles about it on short notice? The first step in finding this person is to check out my LinkedIn profile.