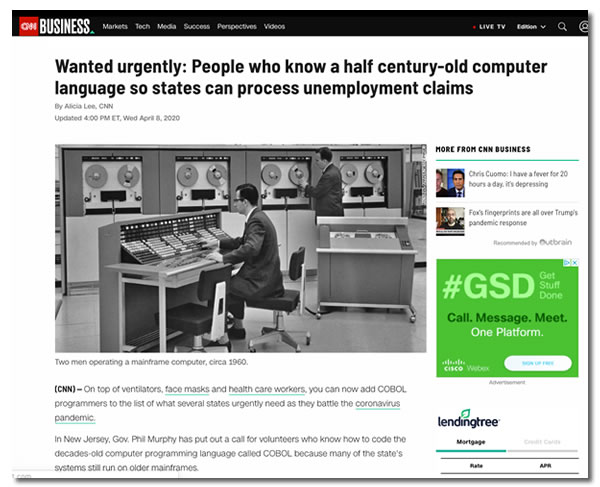

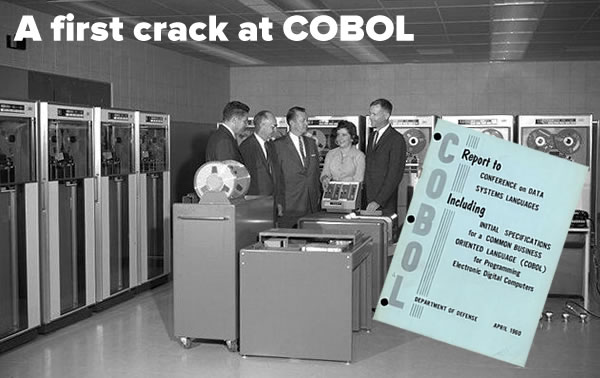

It’s been an age since I last played with COBOL. The last time I got to noodle with it was on a terminal in the math building at my alma mater, Queen’s University. The terminal was hooked up to a large time-sharing system running software that couldn’t run on my computer at the time, a 640K PC-XT made by Ogivar, which was once the top PC manufacturer in Canada. Today, that same software could probably be handled by even the bottom-of-the-line laptop at Best Buy running a copy of Ubuntu Linux.

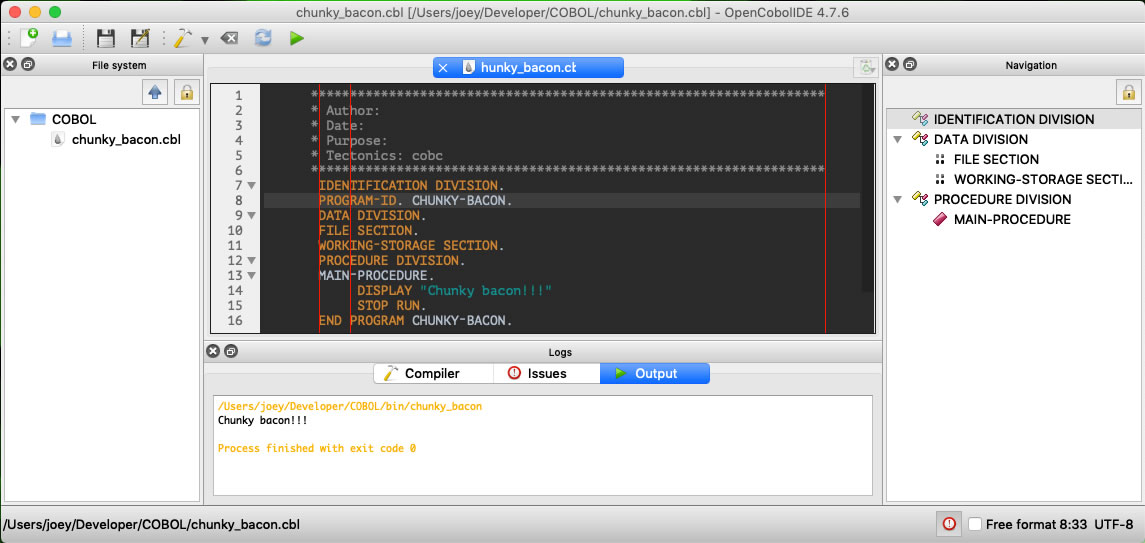

I wrote my first COBOL program in a long time today: Stupid Interest Calculator. It’s the kind of starter assignment you’d have been handed in an “Intro to COBOL” course at a university in the late ’70s or early ’80s.

******************************************************************

*

* Stupid Interest Calculator

* ==========================

*

* A sample COBOL app to demonstrate the programming language

* and make me doubt that I’m living in the 21st century.

*

******************************************************************

IDENTIFICATION DIVISION.

PROGRAM-ID. STUPID-INTEREST-CALCULATOR.

DATA DIVISION.

FILE SECTION.

WORKING-STORAGE SECTION.

* In COBOL, you declare variables in the WORKING-STORAGE section.

* Let’s declare a string variable for the user’s name.

* The string will be 20 characters in size.

77 USER-NAME PIC A(20).

* Simple one-character throwaway string variable that we’ll use

* just to allow the user to press ENTER to end the program.

77 ENTER-KEY PIC A(1).

* The standard input variables for calculating interest.

* The principal will be a 6-digit whole number, while

* the interest rate and years will be 2-digit whole numbers.

77 PRINCIPAL PIC 9(6).

77 INTEREST-RATE PIC 9(2).

77 YEARS PIC 9(2).

* And finally, variables to hold the results. Both will be

* 5-figure numbers with 2 decimal places.

77 SIMPLE-INTEREST PIC 9(5).99.

77 COMPOUND-INTEREST PIC 9(5).99.

PROCEDURE DIVISION.

* Actual code goes here!

MAIN-PROCEDURE.

PERFORM GET-NAME

PERFORM GET-LOAN-INFO

PERFORM CALCULATE-INTEREST

PERFORM SHOW-RESULTS

GOBACK.

* Get the user’s name, just to demonstrate getting a string

* value via keyboard input and storing it in a variable.

GET-NAME.

DISPLAY "Welcome to Bank of Murica!"

DISPLAY "What's your name?"

ACCEPT USER-NAME

DISPLAY "Hello, " USER-NAME "!".

* Get the necessary info to perform an interest calculation.

GET-LOAN-INFO.

DISPLAY "What is the principal of your loan?"

ACCEPT PRINCIPAL

DISPLAY "What is the interest rate (in %)"

ACCEPT INTEREST-RATE

DISPLAY "How many years will you need to pay off the loan?"

ACCEPT YEARS.

* Do what who-knows-how-many lines of COBOL have been

* doing for decades, and for about 95% of all ATM transactions.

CALCULATE-INTEREST.

COMPUTE SIMPLE-INTEREST = PRINCIPAL +

((PRINCIPAL * YEARS * INTEREST-RATE) / 100) -

PRINCIPAL

COMPUTE COMPOUND-INTEREST = PRINCIPAL *

(1 + (INTEREST-RATE / 100)) ** YEARS -

PRINCIPAL.

SHOW-RESULTS.

DISPLAY "Here’s what you'll have to pay back."

DISPLAY "With simple interest: " SIMPLE-INTEREST

DISPLAY "With compound interest: " COMPOUND-INTEREST

DISPLAY " "

DISPLAY "Press ENTER to end."

ACCEPT ENTER-KEY.

* Yes, this needs to be here, and the name of the program

* must match the name specified in the PROGRAM-ID line

* at the start of the program, or COBOL will throw a hissy fit.

END PROGRAM STUPID-INTEREST-CALCULATOR.

Just look at that beast. It’s got all the marks of a programming language that came about in the era of punch cards, teletype terminals, and all the other accoutrements of computing in the Mad Men era. Note the way variables are declared with PIC clauses, the procedures that take no parameters and have no local variables, the ALL-CAPS, and the clunky keywords like PERFORM to call subroutines and COMPUTE to assign the result of a calculation to a variable.
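If COMPUTE looks clunky, here's a small side sketch of the even older one-verb-per-step style that it stands in for. This isn't part of Stupid Interest Calculator: the program name VERB-DEMO and the variables WORK-AMOUNT and INTEREST-OUT are made up for this example, the input values are hard-coded instead of read with ACCEPT, and I'm assuming fixed-format source compiled with GnuCOBOL.

```cobol
       IDENTIFICATION DIVISION.
       PROGRAM-ID. VERB-DEMO.
       DATA DIVISION.
       WORKING-STORAGE SECTION.
      * Hypothetical names and hard-coded values for this sketch.
       77 PRINCIPAL       PIC 9(6)    VALUE 10000.
       77 INTEREST-RATE   PIC 9(2)    VALUE 18.
       77 YEARS           PIC 9(2)    VALUE 5.
       77 WORK-AMOUNT     PIC 9(9).
       77 INTEREST-OUT    PIC 9(5).99.
       PROCEDURE DIVISION.
       MAIN-PROCEDURE.
      * Simple interest with COMPUTE, one expression.
           COMPUTE INTEREST-OUT =
               (PRINCIPAL * YEARS * INTEREST-RATE) / 100
           DISPLAY "COMPUTE: " INTEREST-OUT
      * The same arithmetic, one verb per step.
      * MULTIPLY a BY b stores the product back in b.
           MULTIPLY PRINCIPAL BY YEARS GIVING WORK-AMOUNT
           MULTIPLY INTEREST-RATE BY WORK-AMOUNT
           DIVIDE WORK-AMOUNT BY 100 GIVING INTEREST-OUT
           DISPLAY "Verbs:   " INTEREST-OUT
           GOBACK.
       END PROGRAM VERB-DEMO.
```

Both DISPLAY lines should show the same figure, since the two halves do the same multiplication and division. The verb-per-step version needs a scratch variable to carry the running product, which is a big part of why COMPUTE is the friendlier choice for anything longer than a single operation.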

Here’s the output from a sample run of Stupid Interest Calculator:

Welcome to Bank of Murica!

What's your name?

Joey

Hello, Joey !

What is the principal of your loan?

10000

What is the interest rate (in %)

18

How many years will you need to pay off the loan?

5

Here’s what you'll have to pay back.

With simple interest: 09000.00

With compound interest: 12877.57

Press ENTER to end.
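The numbers in that run can be checked by hand. Here's the arithmetic from the two COMPUTE statements written out, using P for the principal, r for the interest rate in percent, and t for the years. Those symbols are my own shorthand for this check, not names from the program.

```latex
\begin{aligned}
I_{\text{simple}}   &= \frac{P \cdot r \cdot t}{100}
                     = \frac{10000 \cdot 18 \cdot 5}{100}
                     = 9000 \\[4pt]
I_{\text{compound}} &= P\left(1 + \frac{r}{100}\right)^{t} - P
                     = 10000 \cdot 1.18^{5} - 10000
                     = 12877.577568
\end{aligned}
```

The simple-interest figure matches exactly. The compound figure comes out as 12877.57 in the program because the COMPUTE statement has no ROUNDED phrase, so the digits past the second decimal place are truncated instead of rounded. Note also that both COMPUTE statements subtract the principal at the end, so the figures shown are the interest alone, not the principal plus interest.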

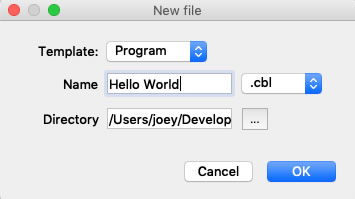

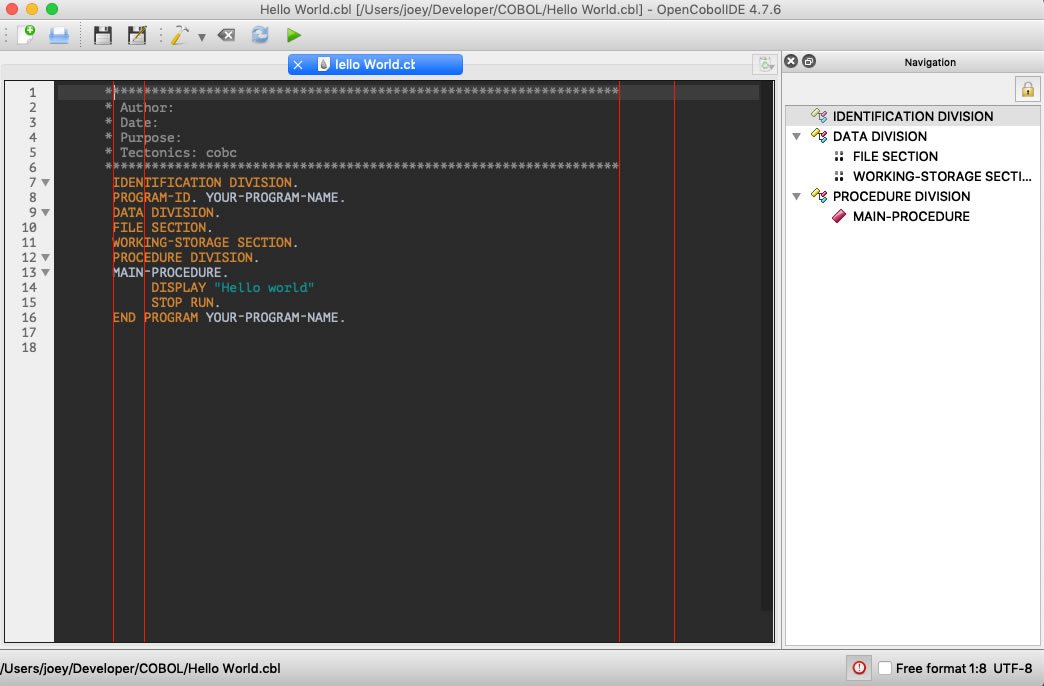

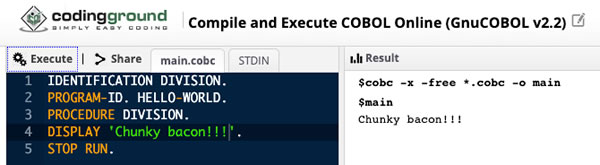

I’ll go over this app in more detail in an upcoming post. In the meantime, if curiosity or boredom got the better of you and you followed the instructions in an earlier post of mine to download GnuCOBOL and OpenCobolIDE for macOS, you can either enter the code above or download the file and take it for a spin (1KB source code file, zipped).

Are you looking for someone with both strong development and “soft” skills? Someone who’s comfortable either being in a team of developers or leading one? Someone who can handle code, coders, and customers? Someone who can clearly communicate with both humans and technology? Someone who can pick up COBOL well enough to write useful articles about it on short notice? The first step in finding this person is to check out my LinkedIn profile.