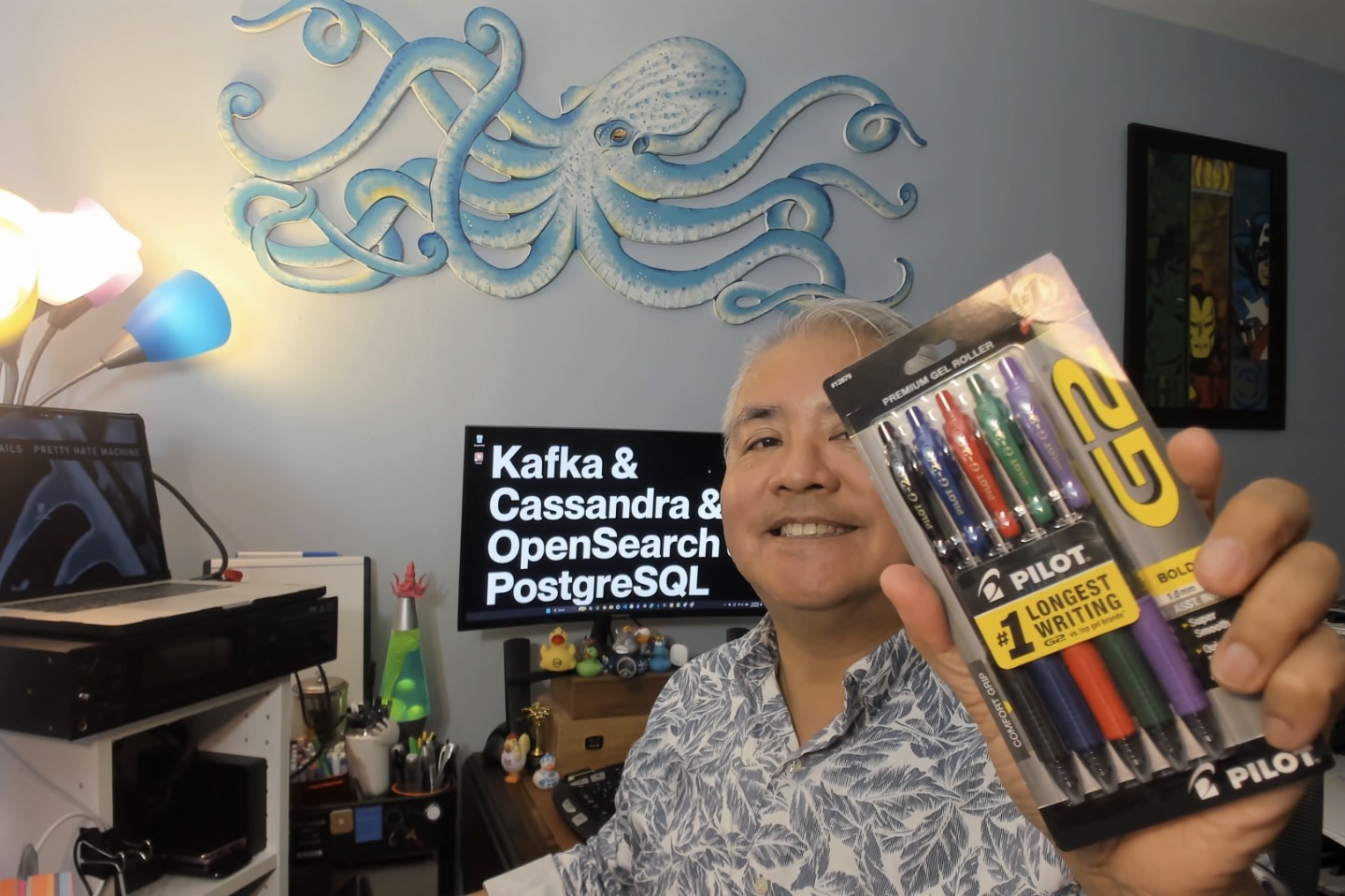

The newest video on the Global Nerdy YouTube channel is now online! It’s called A Fake Recruiter Tried to Scam Me — I Caught Him Using ChatGPT. Watch it now!

It’s the story of how a scammer posing as an executive recruiter tried to con me out of hundreds (and possibly thousands) of dollars using AI-generated emails, a fake job description, and a fabricated “internal document” from OpenAI.

He had me… for thirty seconds, and then I thought about it.

The short version

A “recruiter” emailed me out of the blue about a developer relations role. This isn’t out of the ordinary. It’s happened before, including a couple of times in the past few months.

However, this role stood out: it was a Director of Developer Relations role at OpenAI. Remote-first, $230K–$280K base, Python-primary, and AI-focused. On paper, it was basically my dream job.

Over the course of several emails, he asked for my resume and salary expectations while giving me nothing concrete in return: no company name, no hiring manager, no specifics.

When I finally got suspicious, I asked him three simple verification questions:

- Who’s your contact at OpenAI?

- Is this a retained or contingency search?

- What’s your formal relationship with the hiring organization?

He went silent for over a day, then came back with a wall of text that answered none of them.

Then came the real play: he told me that OpenAI required three “professional documents” before I could interview, and that they had to be ready within 48 hours:

- An “Executive Impact Matrix,”

- A “Technical Leadership Competency Assessment,” and

- A “Cross-Functional Influence & Initiative Report”

The descriptions made these documents sound complex, as if each would take hours to prepare. The recruiter “helpfully” offered to connect me with a “specialist” who could prepare them for a fee.

None of these documents are real. No company asks for them. It’s a document preparation fee scam, and the whole weeks-long email exchange was just the runway to get me to that moment.

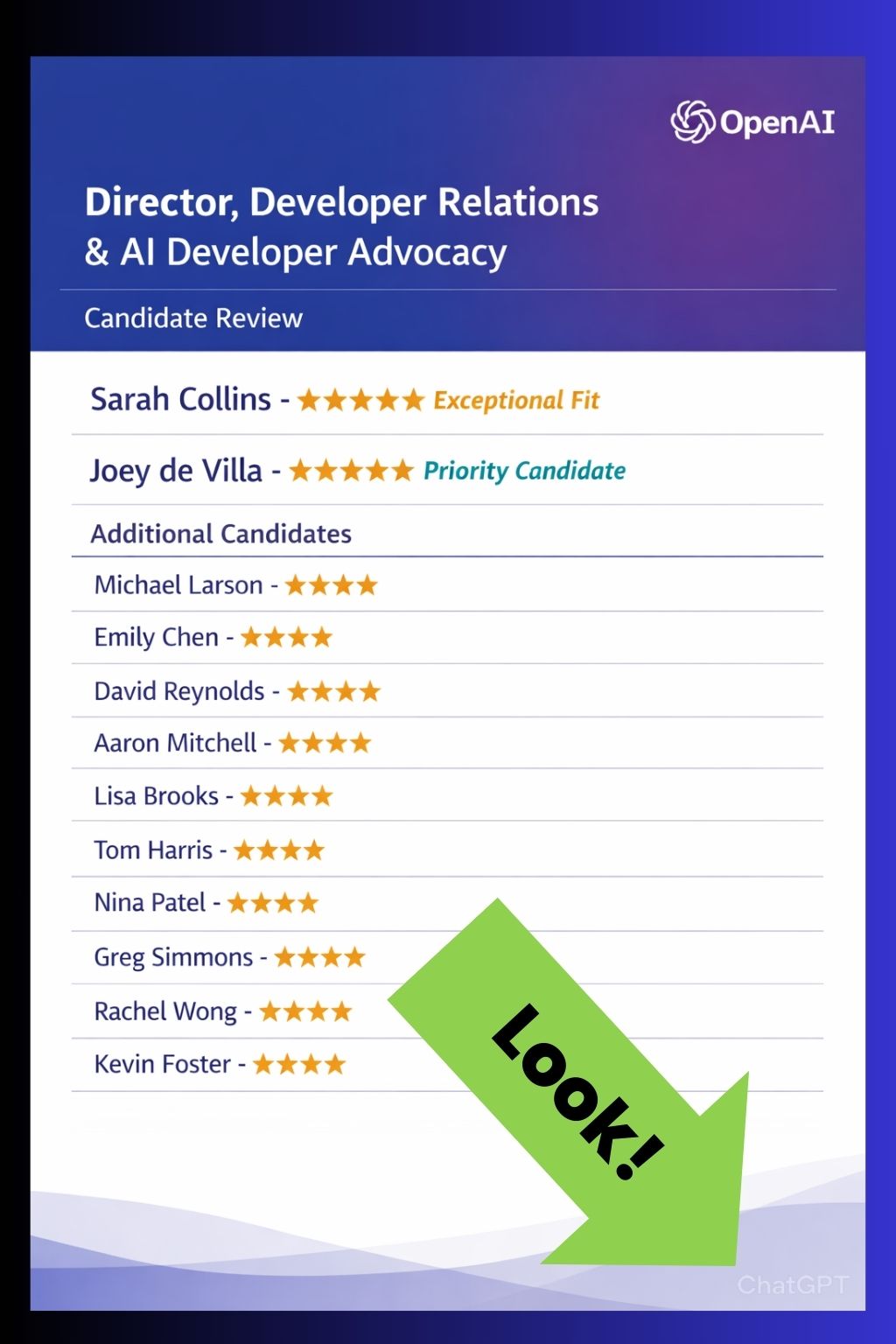

But the best part? When I didn’t bite, he followed up with a fake “OpenAI Candidate Review” document showing my name alongside other “candidates,” complete with star ratings. If it were real, sharing it with me would be a massive HR violation.

But it wasn’t real! He generated it with ChatGPT. And he left behind evidence — the dumbass forgot to crop out the watermark.

How the AI gave him away

One of the most interesting things about this scam is how AI was both the scammer’s greatest tool and his undoing.

Every email he sent me was written in polished, flawless corporate English.

But in the one paragraph where he steered me toward paying the “specialist,” the grammar suddenly fell apart:

“a professional I have known for years that specialise in this kind of documents with many great and positive result.”

The AI wrote the con. But the human wrote the close. And the seam between the two is where the truth leaked out.

This is a pattern worth watching for. As AI-powered scams become more common, the tell is going to be a shift in quality at the moment when the scammer needs to speak in their own words. You’ll see well-written text, abruptly followed by a different writing style marked by poor, non-idiomatic grammar (because they’re communicating with you in a language they don’t know well). Keep an eye out for that sudden transition.

The 3 questions real recruiters can answer

If you’re job searching right now and a recruiter reaches out, ask them these three questions:

- Who is your contact at the hiring company?

- Is this a retained or contingency search?

- What is your formal relationship with the hiring organization?

A real recruiter answers these in seconds. A fake one dodges, deflects, or disappears.

8 fake recruiter red flags

Based on my experience, here are eight things to watch out for:

- The job seems tailor-made for you. LLMs make it trivially easy to generate a convincing “JD” (job description) from someone’s LinkedIn profile. If it checks every single box, ask why.

- The information only flows one direction. They ask for your resume, salary, and preferences. They give you nothing concrete: no company name, no hiring manager, no search terms.

- The email footer doesn’t add up. Gmail addresses or mismatched domains, vague or incomplete street addresses, and an “alphabet soup” of certifications are all warning signs.

- They dodge verification questions. Real recruiters are proud of their client relationships. Fake ones ghost you when you ask for specifics.

- They ask you to pay for documents or preparation. No legitimate employer requires this. Ever. This is always the scam.

- Watch for the grammar shift. Polished emails that suddenly drop in quality when money enters the conversation? That’s AI-generated content with a human-written sales pitch sloppily stitched in.

- Check the metadata. If they send you an “official” document, look at every corner, every file property, every detail. Scammers are playing a numbers game, and as a result, they’re often rushed and sloppy. Sometimes they literally leave the watermark.

- The emotional setup is part of the scam. Flattery, validation, and the sense that someone finally sees your worth are intoxicating, especially when you’ve been job hunting for months. That’s by design. The best time to be skeptical is when you most want to believe.

Why this matters right now

This isn’t just my problem. It’s an epidemic:

- Job scams grew over 1,000% between May and July 2025, according to McAfee.

- Losses from recruitment fraud exceeded $500 million in 2024, according to the FTC.

- 6 in 10 job seekers encountered a fake recruiter in 2025, and 1 in 4 fell for a scam, according to a PasswordManager.com survey.

AI tools are making these scams more polished, more personalized, and harder to detect. The “spray and pray” emails with obvious typos are being replaced by tailored, multi-email campaigns that build trust over weeks before making their move.

If you’re job searching (or know someone who is), please share this post and the video. The more people know what to look for, the less effective these scams become.

Watch the video

Once again, here’s the video, where I walk through the entire scam step by step, from the first email to the ChatGPT watermark:

And if you haven’t already, subscribe to the Global Nerdy YouTube channel. There’s more coming soon, and I promise it’ll be less infuriating than this one. Probably.

Report it

If this has happened to you, here’s where to report it:

- FTC: reportfraud.ftc.gov

- FBI (IC3): ic3.gov

- LinkedIn: Report the profile directly through the platform

- Your state Attorney General: naag.org

And if you’ve got your own story about a fake recruiter, drop me a line on LinkedIn! Let’s make these scams harder to pull off.