I was the guest author of this week’s Startup Digest Tampa Bay newsletter, which was sent out on Monday. I was free to write about any topic that I thought subscribers to the newsletter might find interesting or useful, and I chose to write about reasons startup founders and members have to be optimistic even though we’re in a global pandemic and corresponding economic crisis.

I’d like to thank Techstars Tampa Bay, Startup Digest Tampa Bay curator Murewa Olubela, and Startup Digest Tampa Bay curator manager Alex Abell for inviting me to write for the newsletter!

Here’s the “director’s cut” of my guest editorial, which has some additional information and ideas…

Reasons for startups to be optimistic

Rather than bore you with a long preamble, I’ll give your week a good start by getting straight to my point: The global pandemic comes with a global business crisis, and crises are where startups shine. Here are three reasons — each with a litany of sub-reasons — why startup founders and team members should be at least a little optimistic about the current situation.

1. Sometimes you don’t know that you’re living in a golden age

The first reason to be optimistic is that recessions have been known to hide golden ages. As far as the last recession is concerned, Thomas Friedman has a theory: that 2007 was “one of the single greatest technological inflection points since Gutenberg…and we all completely missed it.”

He made his point compellingly by listing what happened then in What the hell happened in 2007?, the second chapter of his 2016 book, Thank You for Being Late. I’ve compiled his list in the table below, expanded the scope to cover the years 2006 through 2008, and thrown in some additional notes.

Looking at that time through the lens of the leaps in technology shown below, it seems like a golden age:

| The leap | Notes |
|---|---|
| Airbnb | In October 2007, as a way to offset the high cost of rent in San Francisco, roommates Brian Chesky and Joe Gebbia came up with the idea of putting an air mattress in their living room and turning it into a bed and breakfast. They called their venture AirBedandBreakfast.com, which later got shortened to its current name. This marks the start of the modern web- and app-driven gig economy. |
| Android | The first version of Android as we know it was announced on September 23, 2008, on the HTC Dream (also sold as the T-Mobile G1). Originally started in 2003 and bought by Google in 2005, Android was at first a mobile operating system in the same spirit as Symbian or, more importantly, Windows Mobile; Google was worried about competition from Microsoft. The original spec was for a more BlackBerry-like device with a keyboard, and did not account for a touchscreen. This all changed after the iPhone keynote. |
| App Store | Apple’s App Store launched on July 10, 2008 with an initial 500 apps. At the time of writing (March 2020), there should be close to 2 million. In case you don’t remember, Steve Jobs’ original plan was to not allow third-party developers to create native apps for the iPhone. Developers were directed to create web apps. The backlash prompted Apple to allow developers to create apps, and in March 2008, the first iPhone SDK was released. |
| Azure | Azure, Microsoft’s foray into cloud computing, and the thing that would eventually bring about its turnaround after Steve Ballmer’s departure, was introduced at their PDC conference in 2008, which I attended in the second week of my job there. |
| Bitcoin | The person (or persons) going by the name “Satoshi Nakamoto” started working on the Bitcoin project in 2007. It would eventually lead to cryptocurrency mania, crypto bros, HODL and other additions to the lexicon, one of the best Last Week Tonight news pieces, and give the Winklevoss twins their second shot at technology stardom after their failed first attempt with a guy named Mark Zuckerberg. |
| Chrome | By 2008, the browser wars were long done, and Internet Explorer owned the market. Then, on September 2, Google released Chrome, announcing it with a comic illustrated by Scott “Understanding Comics” McCloud, and starting the Second Browser War. When Chrome was launched, Internet Explorer had about 70% of the browser market. In less than 5 years, Chrome would overtake IE. |
| Data: bandwidth costs and speed | In 2007, bandwidth costs dropped dramatically while transmission speeds climbed. |
| Dell returns | After stepping down from the position of CEO in 2004 (but staying on as Chairman of the Board), Michael Dell returned to the role on January 31, 2007 at the board’s request. |
| DNA sequencing costs drop dramatically | The end of the year 2007 marks the first time that the cost of genome sequencing dropped dramatically, from the order of tens of millions of dollars to single-digit millions. Today, that cost is about $1,000. |
| DVD formats: Blu-Ray and HD-DVD | Two high-definition optical disc formats launched in 2006, and their format war was settled in 2008. You probably know which one won. |
| Facebook | In September 2006, Facebook expanded beyond universities and became available to anyone over 13 with an email address, making it available to the general public and forever altering its course, along with the course of history. |
| Energy technologies: Fracking and solar | Growth in these two industries helped turn the US into a serious net energy provider, which would help drive the tech boom of the 2010s. |
| GitHub | Originally founded as Logical Awesome in February 2008, GitHub’s website launched that April. It would grow to become an indispensable software development tool, and a key part of many developer resumes (mine included). It would first displace SourceForge, which used to be the place to go for open source code, and eventually become part of Microsoft’s apparent change of heart about open source when they purchased the company in 2018. |
| Hadoop | In 2006, developer Doug Cutting of Apache’s Nutch project took GFS (Google File System, written up by Google in 2003) and the MapReduce algorithm (written up by Google in 2004) and combined them with the dataset tech from Nutch to create the Hadoop project. He gave his project the name that his son gave to his yellow toy elephant, hence the logo. By enabling applications and data to be run and stored on clusters of commodity hardware, Hadoop played a key role in creating today’s cloud computing world. |
| Intel introduces non-silicon materials into its chips | January 2007: Intel’s PR department called it “the biggest change to computer chips in 40 years,” and they may have had a point. The new materials that they introduced into the chip-making process allowed for smaller, faster circuits, which in turn led to smaller and faster chips, which are needed for mobile and IoT technologies. |
| Internet crosses a billion users | This one’s a little earlier than our timeframe, but I’m including it because it helps set the stage for all the other innovations. At some point in 2005, the internet crossed the billion-user line, a key milestone in its reach and other effects, such as the Long Tail. |
| iPhone | On January 9, 2007, Steve Jobs said the following at this keynote: “Today, we’re introducing three revolutionary new products…an iPod, a phone, and an internet communicator…Are you getting it? These are not three separate devices. This is one device!” The iPhone has changed everyone’s lives, including mine. Thanks to this device, I landed my (current until recently) job, and right now, I’m working on revising this book. |
| iTunes sells its billionth song | On February 22, 2006, Alex Ostrovsky from West Bloomfield, Michigan purchased Coldplay’s Speed of Sound on iTunes, and it turned out to be the billionth song purchased on that platform. This milestone proved to the music industry that it was possible to actually sell music online, forever changing an industry that had been thrashing since the Napster era. |
| Kindle | Before tablets or large smartphones came Amazon’s Kindle e-reader, which came out on November 19, 2007. It was dubbed “the iPod of reading” at the time. You might not remember this, but the first version didn’t have a touch-sensitive screen. Instead, it had a full-size keyboard below its screen, in a manner similar to phones of that era. |
| Macs switch to Intel | The first Intel-based Macs were announced on January 10, 2006: The 15″ MacBook Pro and iMac Core Duo. Both were based on the Intel Core Duo. Motorola’s consistent failure to produce chips with the kind of performance that Apple needed on schedule caused Apple to enact their secret “Plan B”: switch to Intel-based chips. At the 2005 WWDC, Steve Jobs revealed that every version of Mac OS X had been secretly developed and compiled for both Motorola and Intel processors, just in case. We may soon see another such transition: from Intel to Apple’s own A-series chips. |
| Netflix | In 2007, Netflix (then a company that mailed rental DVDs to you) started its streaming service. This would eventually give rise to binge-watching, to one of my favorite technological innovations, Netflix and chill (and yes, there is a Wikipedia entry for it!), and to Tiger King, which is keeping us entertained as we stay home. |
| Python 3 | The release of Python 3 (a.k.a. Python 3000) in December 2008 was the beginning of the Second Beginning! While Python had been eclipsed by Ruby in the 2000s thanks to Rails and the rise of MVC web frameworks and the supermodel developer, it made its comeback in the 2010s as the language of choice for data science and machine learning thanks to a plethora of libraries (NumPy, SciPy, Pandas) and support applications (including Jupyter Notebooks). I will always have an affection for Python. I cut my web development teeth in 1999 helping build Givex.com’s site in Python and PostgreSQL. I learned Python by reading O’Reilly’s Learning Python while at Burning Man 1999. |
| Shopify | In 2004, frustrated with existing ecommerce platforms, programmer Tobias Lütke built his own platform to sell snowboards online. He and his partners realized that they should be selling ecommerce services instead, and in June 2006, they launched Shopify. |
| Spotify | The streaming service was founded in April 2006, launched in October 2008, and along with Apple and Amazon, changed the music industry. |
| Surface (as in Microsoft’s big-ass table computer) | Announced on May 29, 2007, the original Surface was a large coffee table-sized multitouch-sensitive computer aimed at commercial customers who wanted to provide next generation kiosk computer entertainment, information, or services to the public. Do you remember SarcasticGamer’s parody video of the Surface? |
| Switches | 2007 was the year that networking switches jumped in speed and capacity dramatically, helping to pave the way for the modern internet. |
| Twitter | In 2006, Twittr (it had no e then, which was the style at the time, thanks to Flickr) was formed. From then, it had a wild ride, including South by Southwest 2007, when its attendees (influential techies) used it as a means of catching up and finding each other at the conference. @replies appeared in May 2007, followers were added that July, hashtag support in September, and trending topics came a year later. Twitter also got featured on an episode of CSI in November 2007, when it was used to solve a case. |
| VMware | After the company performed poorly financially, the husband-and-wife cofounders of VMware (Diane Greene, president and CEO, and Mendel Rosenblum, Chief Scientist) left. Greene was fired by the board in July 2008, and Rosenblum resigned two months later. VMware would go on to experience record growth, and its hypervisors would become a key part of making cloud computing what it is today. |
| Watson | IBM’s Watson underwent initial testing in 2006, when Watson was given 500 clues from prior Jeopardy! programs. Wikipedia will explain the rest. |
| Wii | The Wii was released in November 2006, marking Nintendo’s comeback in a time when the console market belonged solely to the PlayStation and Xbox. |
| XO computer | You probably know this device better as the “One Laptop Per Child” computer: the laptop that was going to change the world, but didn’t quite do that. Still, its form factor lives on in today’s Chromebooks, which are powered by Chrome (which also debuted during this time), and the concept of open source hardware continues today in the form of Arduino and Raspberry Pi. |
| YouTube | YouTube was purchased by Google in October 2006. In 2007, it exploded in popularity, consuming as much bandwidth as the entire internet did 7 years before. In the summer and fall of 2007, CNN and YouTube produced televised presidential debates, where Democratic and Republican US presidential hopefuls answered YouTube viewer questions. You probably winced at this infamous YouTube video, which was posted on August 24, 2007: Miss Teen USA 2007 – South Carolina answers a question, which has amassed almost 70 million views to date. |

How did most of us miss all this? Friedman says that it’s because our collective attention was directed toward the credit crunch of 2008, which he calls “the deepest recession since the crash of 1929.”

Back then, everybody compared the financial collapse of that time to the stock market crash of ’29. Now that we’re in the middle of a pandemic, 2008 has become the new benchmark for economic catastrophe. As founders, entrepreneurs, and technologists, there’s a good chance that you’re already asking this question: Is there a chance that the current situation is also hiding a golden age for technology and startups?

In case you think that the golden age of 2006 – 2008 was just an outlier, here are a few examples from previous crises:

- Thomas Edison founded the company that would eventually become General Electric during an economic slump brought about by the Baring Crisis and “the world’s first bailout”. Its effects were felt worldwide, and it took an international consortium and a number of Rothschilds to prevent an economic catastrophe. In the 1960s, GE was one of the few companies making computers and operating systems, and its influence extends to this very day (GE, along with Bell Labs and MIT, made Multics, which would inspire the creation of Unix).

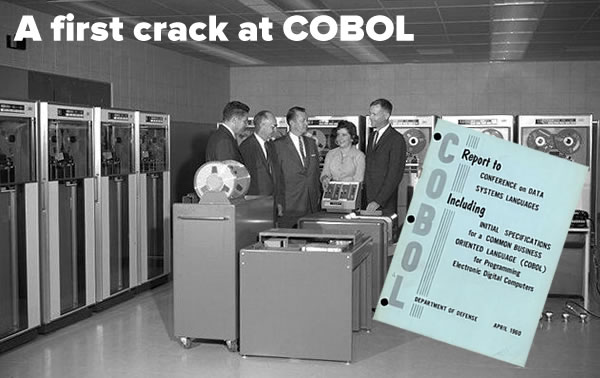

- The Tabulating Machine Company, which would evolve into IBM, was born during The Panic of 1896, a sequel to the Panic of 1893. This was a time when the unemployment rate climbed as high as 15%. IBM is still a big player in mainframes, which are in the news again, thanks in part to the pandemic.

- Apple and Microsoft came about in the mid-1970s, just after the 1973 – 1975 recession, the oil crisis of 1973, the Nixon shock, the end of the gold standard and Bretton Woods, and the start of the U.S. dollar being a fiat currency (a term that you’re probably familiar with if you even just dabble in cryptocurrencies). You’re probably quite familiar with what these companies went on to do.

2. Startups that last get founded during downturns

Putting aside the chance that I might be a victim of survivorship bias, there are a number of reasons why a recession or economic downturn is the optimal time to create or join a startup:

- New situations create new needs: Just look at Zoom’s fortunes right now. What other needs has the “New Normal” created, in both the short and long term?

- Available brainpower: With an economic downturn comes increased unemployment, which means that the talent you need for your startup is more likely to be available (this pool of people includes myself). This brainpower can be key to a startup’s success.

- Available startups: If you’re not looking to be a founder and are looking for a place to work, you may find a number of founders looking to create their own company, often because they can’t find employment themselves.

- Recession pricing: The price of goods and services tends to drop during downturns, which is an advantage to a company that’s trying to operate “lean and mean.” There may also be some savings opportunities in other developments, including the drop in interest rates and other economic stimuli, as well as companies selling off assets that startups may find useful. You may even find it easier to get coverage, as the media will be looking for some “good news” stories to tell.

- Chaos: Your likely competition (large, established players) is probably in disarray or too focused on survival or reorganizing to take notice of you. A business that’s lean and nimble is better-positioned to navigate the changes that a downturn brings about, and better able to take advantage of the eventual turn-around.

- Pressure makes diamonds: There’s no comfort zone in a downturn! A company that starts during one has a culture of resilience and grit baked into its DNA, and the lessons that risk and non-terminal failure teach during difficult times make for a powerful team. The “war stories” that come about from shared challenges also make for a loyal team.

A downturn doesn’t guarantee success for a startup, but it’s a crucible that can strengthen one.

3. We’re in the middle of a number of natural experiments

The final item in this list of reasons to be optimistic is that we’re in a set of unprecedented natural experiments; that is, we’re witnessing “what if” scenarios that are no longer hypothetical. In these scenarios, there are object lessons, opportunities, and problems that the right startup idea could solve or ameliorate.

- Internet bandwidth: One of the major arguments that telcos have given us for bandwidth caps was that without this sort of control (they never mention the associated cash-grab), the internet would become so congested as to be unusable. With the pandemic, telcos have lifted bandwidth caps, and the internet still works, even with the additional usage from being home-bound as well as Tiger King.

- Remote work: In recent years, Yahoo! and IBM famously ended their remote work policies, which led other, smaller organizations to consider doing the same. With “safer at home” measures, we’re all global participants in a remote work experiment, and to the surprise of doubters, it seems to be working. Remote work is likely to be a fixture of office life even after this crisis, and this change will create a need for new and expanded services and technologies.

- Remote school: Just as working people are being subjected to a natural experiment, so are students and teachers, who are being thrown into the deep end with distance-learning tools and technologies. There are a number of challenges to overcome, from usability and adjusting teaching and learning styles, to reaching students who can’t afford internet access or the right technology at home, to the disruption that this brings to students’ and teachers’ lives and to the school curriculum in general.

- Unemployment: I’m in this category, as one of at least 10 million people in the U.S. who’ve suddenly found themselves without work. What happens when this many people are jobless, in a world with ubiquitous connectivity and computing? Remember, the smartphone as we know it was in the hands of a small number of people in 2008, while 4 out of 5 adults in the U.S. have a smartphone today. How can a startup help them get back to work?

- Additional online learning: You may have seen the advice in news stories or online: If you’ve been laid off, this is the perfect time to take an online course and “upskill.” With record numbers of people applying for unemployment assistance, we’re seeing a strong uptick in online course enrollments.

- Business and government systems under strain: While the internet seems to be handling the increase in use, other systems have been put under strain by the pandemic. The hospitality industry has largely been shut down. Supply chains are being stressed. Our pandemic response infrastructure was already gutted before the pandemic struck. Our governments are unprepared in all sorts of ways, from a piecemeal response to the pandemic, to aging, COBOL-powered systems unprepared (and in Florida’s case, unprepared by design) to process the massive influx of requests for unemployment assistance. This sounds like a job for a startup!

- Healthcare: The United States remains the only industrialized nation without universal healthcare. To my Canadian-raised mind, this is baffling; to many Americans, universal healthcare is an unaffordable luxury. The U.S. government’s ability to “magic up” trillions of dollars to stimulate the economy (or at least Ruth’s Chris Steak House) on incredibly short notice proves that if the political will existed, it could choose to bankroll universal healthcare. With 1 in 4 Americans expected to be unemployed and healthcare insurance generally being tied to employment, universal healthcare is no longer as “unthinkable” an idea as it once was.

- 3D printing’s first mass test: We’ve seen 3D printing prove useful in one-off situations (including a time when they needed a specific kind of wrench on the International Space Station), but with volunteers creating large numbers of face shields, masks, and even ventilator parts and adapters, this is 3D printing’s first at-scale test. The lessons from this effort have yet to be learned, and what we learn could launch 3D printing into its next phase.

- Media and communications: This is the first worldwide crisis where publishers, from the largest media empire to individual vloggers, have become much relied-on sources of both information and misinformation. We’re not done seeing the full extent of their effects yet.

- Social systems put to the test: The disruption of normal life, from staying at home to social distancing, has resulted in widely different responses, from science-based to conspiracy theory-based. The major social media players have put in some measures to fight the spread of accidentally or deliberately incorrect information. I have no doubt that even nation-states are playing the misinformation game; after all, the saying is “never let a good crisis go to waste.”

- New political movements: The economic downturn of 2008 left a lot of people dislocated and in dire situations from which they still haven’t recovered, giving birth to a new populism, a willingness to follow brutish, xenophobic, and nationalistic leaders, and movements like Brexit and MAGA. What will this new situation — one brought about at least partially by the movements that arose after 2008 — bring?

- Emerging cultures of control: I’m going to end this list on a slightly darker note, in spite of my general optimism. We’re already seeing signs of an emerging hygiene culture and an awareness of the importance of hand-washing, which is good. Perhaps there are startup opportunities that might come about from people being more aware of the power of microorganisms and viruses (viruses are technically “not alive”, and exist in their own category). More worrisome are other cultures of control, namely those of surveillance and authoritarianism, which are also rearing their heads during this crisis. Let’s take care so that the things we create don’t turn the world into another Black Mirror episode.