One of yesterday’s top stories on Reddit was Michael McDonough’s essay, The Top 10 Things They Never Taught Me in Design School. Here’s a quick run-down of those top ten things:

- Talent is one-third of the success equation.

- 95 percent of any creative profession is shit work.

- If everything is equally important, then nothing is very important.

- Don’t over-think a problem.

- Start with what you know; then remove the unknowns.

- Don’t forget your goal.

- When you throw your weight around, you usually fall off balance.

- The road to hell is paved with good intentions; or, no good deed goes unpunished.

- It all comes down to output.

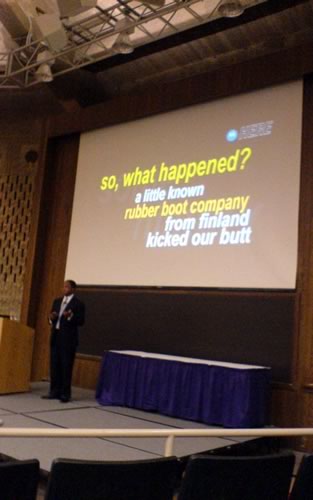

- The rest of the world counts.

Software development is a kissing cousin of engineering (if not an engineering discipline itself), and blends creativity with math and science. That’s why I find that a lot of advice to creative types is also applicable to software developers. Andrés Taylor of ThoughtWorks seems to think so, and was inspired by The Top 10 Things They Never Taught Me in Design School to write his own piece titled Top ten things ten years of professional software development has taught me. Here’s his list, all of which I consider sound advice, with some excerpts of his explanations.

- Object orientation is harder than you think. “It turns out that it’s pretty hard. Ten years later, I’m still learning how to model properly. I wish I spent more time reading up on OO and design patterns. Good modeling skills are worth a lot to every development team.”

- The difficult part of software development is communication. “And that’s communication with persons, not socket programming. Now and then you do run into a tricky technical problem, but it’s not at all that common. Much more common is misunderstandings between you and the project manager, between you and the customer and finally between you and the other developers. Work on your soft skills.”

- Learn to say no. “When I started working, I was very eager to please. This meant that I had a hard time saying no to things people asked of me. I worked a lot of overtime, and still didn’t finish everything that was asked of me. The result was disappointment from their side, and almost burning out on my part. If you never say no, your yes is worth very little.”

- If everything is equally important, then nothing is important. “The business likes to say that all the features are as crucial. They are not. Push back and make them commit. It’s easier if you don’t force them to pick what to do and what not to do. Instead, let them choose what you should do this week. This will let you produce the stuff that brings value first. If all else goes haywire, at least you’ve done that.”

- Don’t over-think a problem. “I don’t mean to say you shouldn’t design at all, just that the implementation will quickly show me stuff I didn’t think of anyway, so why try to make it perfect? Like Dave Farell says: ‘The devil is in the details, but exorcism is in implementation, not theory.’”

- Dive really deep into something, but don’t get hung up. “Chris and I spent a lot of time getting into the real deep parts of SQL Server. It was great fun and I learned a lot from it, but after some time I realized that knowing that much didn’t really help me solve the business’ problems.”

- Learn about the other parts of the software development machine. “It’s really important to be a great developer. But to be a great part of the system that produces software, you need to understand what the rest of the system does. How do the QA people work? What does the project manager do? What drives the business analyst? This knowledge will help you connect with the rest of the people, and will grease interactions with them. Ask the people around you for help in learning more. What books are good? Most people will be flattered that you care, and willingly help you out. A little time on this goes a really long way.”

- Your colleagues are your best teachers. “A year after I started on my first job, we merged with another company. Suddenly I had a lot of much more talented and experienced people around me. I remember distinctly how this made me feel inferior and stupid…Nowadays, working with great people doesn’t make me feel bad at all. I just feel I have the chance of a lifetime to learn. I ask questions and I try really hard to understand how my colleagues come to the conclusions they do…See your peers as an asset, not competition.”

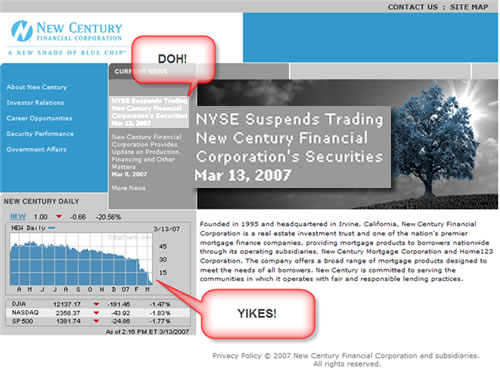

- It all comes down to working software. “No matter how cool your algorithms are, no matter how brilliant your database schema is, no matter how fabulous your whatever is, if it doesn’t scratch the clients’ itch, it’s not worth anything.”

- Some people are assholes. “People that because of something or other are plain old mean. Demeaning bosses. Lying colleagues. Stupid, ignorant customers. Don’t take this too hard. Try to work around them and do what you can to minimize the pain and effort they cause, but don’t blame yourself. As long as you stay honest and do your best, you’ve done your part.”